This post covers research I did a while ago but only now found the time to write up.

At the beginning of this year I was curious to see how many Puppet 3 servers (back then freshly end of life) were connected directly to the internet:

Puppet 3.x is now end of life, happy updating! shodan still finds 15k version 3 servers online – not even firewalled 😀

— Roman (@faker_) January 1, 2017

If you don’t know Puppet: It’s a configuration management system that contains information to deploy systems and services in your infrastructure. You write code which defines how a system should be configured e.g. which software to install, which users to deploy, how a service is configured, etc.

It typically uses a client-server model: the clients periodically pull the configuration (“catalog”) from their configured server and apply it locally. Everything is transferred over TLS-encrypted connections.

Puppet uses TLS client certificates to authenticate nodes. When a client (“puppet agent”) connects for the first time to a server it will generate a key locally and submit a certificate signing request to the server.

An operator needs to sign the certificate, and from that point on the agent can pull its configuration from the server.

However, it’s possible to configure a Puppet server to automatically sign all incoming CSRs.

This is obviously not recommended unless you want anyone to be able to retrieve possibly sensitive information about your infrastructure. The Puppet documentation mentions several times that this is insecure: https://docs.puppet.com/puppet/3.8/ssl_autosign.html#nave-autosigning
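To check your own server for this setting, something like the following could work. It is only a minimal sketch: it assumes that `puppet.conf` is close enough to INI syntax for Python's `configparser`, that the setting is called `autosign` and lives in `[master]` or `[main]`, and that `[master]` takes precedence. The function name and the sample file content are made up for illustration.

```python
import configparser


def autosign_mode(conf_text):
    """Classify the autosign setting found in puppet.conf-style text."""
    # Strip indentation so configparser does not mistake indented
    # settings for continuation lines of the previous value.
    cleaned = "\n".join(line.strip() for line in conf_text.splitlines())
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(cleaned)

    # Assumption: the run-mode section [master] overrides [main].
    for section in ("master", "main"):
        if parser.has_option(section, "autosign"):
            value = parser.get(section, "autosign").strip().lower()
            break
    else:
        return "default"  # not set, Puppet's built-in default applies

    if value == "true":
        return "naive"     # every incoming CSR gets signed
    if value == "false":
        return "disabled"  # manual signing only
    return "path"          # an allowlist file or a policy executable


# Hypothetical puppet.conf content for the demo.
sample = """
[main]
    ssldir = /var/lib/puppet/ssl

[master]
    autosign = true
"""
print(autosign_mode(sample))
```

If this reports `naive` for a server that is reachable from untrusted networks, that server is in the group described in the rest of this post.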

First I wanted to know whether anyone was already looking for servers configured like this.

I set up a honeypot Puppet server with auto-signing enabled and waited.

But after months there still was not a single CSR submitted; only port scanners tried to connect to it.

So I decided to look for those servers myself.

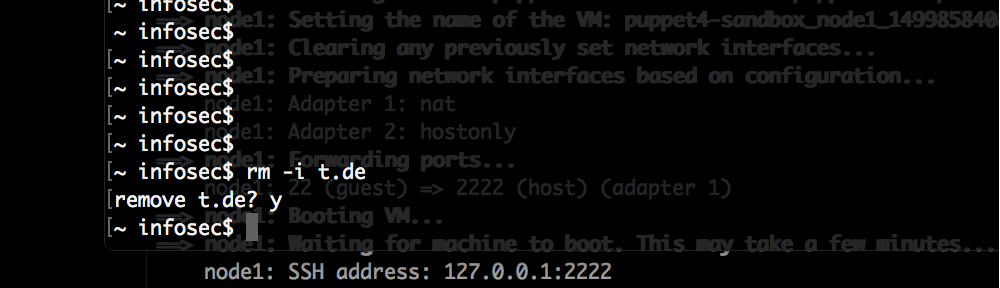

With a small script I looped over around 2500 servers that were still online. There were more, but due to time constraints I only checked 2500. The script connects to each of those systems and submits a CSR. The “certname” (this is what operators will see when reviewing CSRs) was always the same, and it was pretty obvious that it was not a legitimate request.

An attacker would do more recon, get the FQDN of the Puppet server from its certificate and try to guess a more plausible name.

Out of those 2500 servers:

89 immediately signed our certificate.

Out of those 89:

50 compiled a valid catalog that could have been applied directly.

39 tried to compile a catalog and failed with issues that could potentially be worked around on the client but no time was spent on that.

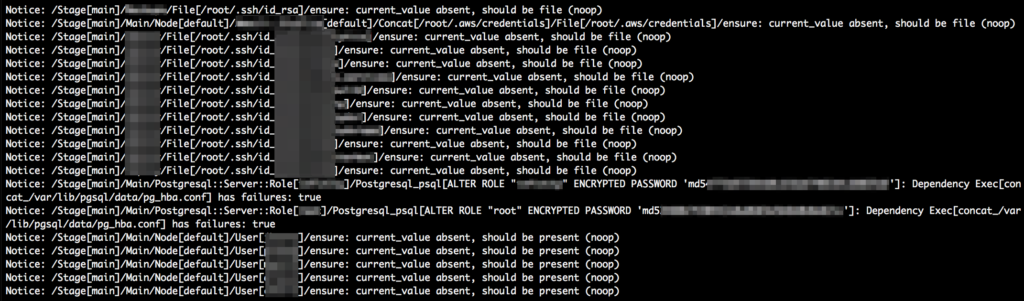

It is a normal setup to have a default role. If an unknown node connects, it may get only this configuration applied. Usually this deploys the administrator user accounts and sets generic settings. This happened a lot, and it is already problematic: the system accounts also get their password hashes configured, which could now be brute-forced. A lot of the servers also conveniently sent along their sudoers configuration, so an attacker could target the higher-privileged accounts directly.

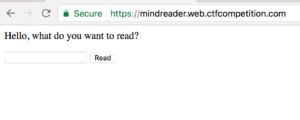

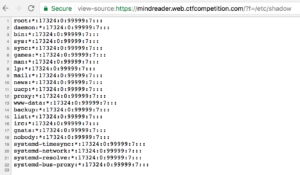

But some of those servers assigned more generic roles automatically. These exposed root SSH keys, AWS credentials, configuration files of their services (Nginx, PostgreSQL, MySQL, …), passwords and password hashes of database and other service users, and more. Some even tried to copy source code to my client.

Some of these systems could be compromised immediately with the information they leak. The others at least tell attackers a lot about the system, which makes further attacks much easier.

Here is where it gets interesting: One day later I connected again to the same 2500 servers, using the same keys from the day before, and tried to retrieve a catalog. Normally you would expect the number to be stable or slightly lower by then. But in this case it was not:

145 servers allowed us to connect.

58 gave us a valid catalog that could be applied.

And one week later:

159 servers allowed us to connect.

63 gave us a valid catalog that could be applied.
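To put the three rounds side by side, here is a small calculation using only the counts from above. The variable and function names are mine.

```python
TOTAL_PROBED = 2500

# (round, servers that accepted the certificate, valid catalogs returned)
rounds = [
    ("immediately", 89, 50),
    ("one day later", 145, 58),
    ("one week later", 159, 63),
]


def share(part, whole):
    """Percentage of `part` in `whole`, rounded to two decimals."""
    return round(100 * part / whole, 2)


for label, accepted, valid in rounds:
    print(
        f"{label}: {accepted} accepted "
        f"({share(accepted, TOTAL_PROBED)}% of probed), "
        f"{valid} valid catalogs ({share(valid, accepted)}% of accepted)"
    )
```

The share of servers accepting the certificate grows from about 3.6% to 5.8% and then 6.4% of the 2500 probed, without a single new CSR being submitted.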

Those servers were not offline during the first round either. Either operators simply signed my CSR in the meantime without noticing that it was not one of their own requests, or, as I suspect, this is the result of the following two issues combined:

1) Generally when you are working with Puppet certificates you’ll be using “puppet cert $sub-command” to handle the TLS operations for you.

The problem is that there is no way to tell it to simply reject a signing request.

You can “revoke” or “clean” a certificate, but it has to be signed first (see: https://tickets.puppetlabs.com/browse/PUP-1916).

There may have been an option using the “puppet ca” command, but it has been deprecated and pretty much all documentation mentions only “puppet cert” nowadays.

You are left with these options:

- Manually figure out where the .csr file is stored on the server and remove it

- Use the deprecated tool “puppet ca destroy $certname”

- Run “puppet cert sign $certname && puppet cert clean $certname”

- Run “puppet cert sign $certname && puppet cert revoke $certname”

Some are unfortunately using the last two options.
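The first option (removing the pending request by hand) can be scripted. The following is only a sketch under assumptions: I assume pending requests are stored as one file per certname in a single directory (on Puppet 3 this should be what the `csrdir` setting points to) and that the files are named `<certname>.pem`. Verify both on your own server before deleting anything. The function names are made up, and the demo runs against a temporary directory, not a real CA.

```python
import tempfile
from pathlib import Path


def pending_requests(requests_dir, suffix=".pem"):
    """Return the certnames that have a pending CSR file in requests_dir."""
    return sorted(p.stem for p in Path(requests_dir).glob(f"*{suffix}"))


def reject_request(requests_dir, certname, suffix=".pem"):
    """Delete the pending CSR for certname without ever signing it."""
    # Refuse names that could escape the requests directory.
    if certname in ("", ".", "..") or "/" in certname or "\\" in certname:
        raise ValueError(f"suspicious certname: {certname!r}")
    target = Path(requests_dir) / f"{certname}{suffix}"
    if target.is_file():
        target.unlink()
        return True
    return False


# Demo against a throwaway directory with two fake requests.
with tempfile.TemporaryDirectory() as demo_dir:
    for name in ("web01.example.com", "totally-not-legit"):
        (Path(demo_dir) / f"{name}.pem").write_text("fake CSR\n")

    print(pending_requests(demo_dir))
    print(reject_request(demo_dir, "totally-not-legit"))
    print(pending_requests(demo_dir))
```

The point of doing it this way is that the request never turns into a valid certificate, not even for a few seconds.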

So on some of those systems my certificate request may have been signed, if only for a few seconds, which brings us to the next issue:

2) Puppet server or in some cases the reverse proxy in front of it will only read the certificate revocation list at startup time.

If you don’t restart these services after revoking a certificate, it will still be allowed to connect.

“puppet cert revoke $certname” basically only adds the certificate to the CRL. It does not remove the signed certificate from the server.

I suspect that some operators have signed and revoked my certificate but haven’t restarted the service afterwards.

On the other hand, “puppet cert clean $certname” will additionally remove the signed certificate from the server. When my client connects later, it cannot get the signed certificate and is locked out.

This isn’t perfect either. If the client constantly requests the certificate it could retrieve it before the “clean” ran, but it is far better than only using “revoke”.
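The difference between “revoke” and “clean”, and the role of the missing restart, can be shown with a toy model. This is not Puppet code: `ToyCA`, `ToyServer` and `scenario` are invented names, and the model only encodes the behaviour described above (the CRL is read once at startup, “revoke” only adds to the CRL, “clean” also deletes the signed certificate).

```python
class ToyCA:
    """Stands in for the CA state on disk: signed certs and the CRL file."""

    def __init__(self):
        self.signed = {}          # certname -> serial of the signed cert
        self.crl_on_disk = set()  # serials listed in the CRL file
        self._next_serial = 1

    def sign(self, certname):
        self.signed[certname] = self._next_serial
        self._next_serial += 1

    def revoke(self, certname):
        # Only adds the serial to the CRL, the signed cert stays available.
        self.crl_on_disk.add(self.signed[certname])

    def clean(self, certname):
        # Revokes and additionally removes the signed cert.
        self.revoke(certname)
        del self.signed[certname]


class ToyServer:
    """Reads the CRL once at startup, like the servers described above."""

    def __init__(self, ca):
        self.ca = ca
        self.restart()

    def restart(self):
        self.crl_cache = set(self.ca.crl_on_disk)

    def download_cert(self, certname):
        return self.ca.signed.get(certname)

    def accepts(self, serial):
        return serial is not None and serial not in self.crl_cache


def scenario(reject, client_polls_first, restart):
    """Return True if the unwanted client can still connect afterwards."""
    ca = ToyCA()
    server = ToyServer(ca)
    ca.sign("probe")
    cert = server.download_cert("probe") if client_polls_first else None
    if reject == "revoke":
        ca.revoke("probe")
    else:
        ca.clean("probe")
    if restart:
        server.restart()
    if cert is None:
        cert = server.download_cert("probe")
    return server.accepts(cert)


print("revoke, no restart:", scenario("revoke", False, False))
print("revoke, restart:   ", scenario("revoke", False, True))
print("clean, no restart: ", scenario("clean", False, False))
print("clean, client won the race, no restart:", scenario("clean", True, False))
```

In this model only a restart reliably locks the client out. “clean” without a restart helps only as long as the client did not fetch its certificate in the short window between signing and cleaning.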

Depending on how you use Puppet, it may be one of your most critical systems containing the keys to your kingdom. There are very few cases where it makes sense to expose a Puppet server directly to the internet.

Those systems should be the best-protected systems in your infrastructure: a compromised Puppet server essentially compromises all clients that connect to it.

Basic or naïve auto-signing should not be used in a production environment unless you can ensure that only trusted clients can reach the Puppet server. Otherwise, only policy-based auto-signing or manual signing is secure.
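For policy-based auto-signing, the `autosign` setting points to an executable. As far as I understand the Puppet documentation, Puppet runs it with the certname as its only argument and the PEM-encoded CSR on stdin, and signs the request only if the executable exits with status 0. Below is a minimal sketch of such a policy. The node names are hypothetical, and a pure name check is weak on its own since names can be guessed, so treat it as a starting point and not as a finished policy.

```python
import sys

# Hypothetical inventory of nodes that are expected to enroll.
EXPECTED_NODES = {
    "web01.internal.example",
    "db01.internal.example",
}


def should_sign(certname, expected_nodes):
    """Sign only requests whose certname is on the expected list."""
    return certname.strip().lower() in expected_nodes


def main(argv):
    """Return the exit status: 0 means sign, anything else means reject."""
    if len(argv) != 2:
        return 1  # fail closed when called in an unexpected way
    return 0 if should_sign(argv[1], EXPECTED_NODES) else 1


# Demo calls; the first argv entry is the script name.
print(main(["policy", "web01.internal.example"]))
print(main(["policy", "totally-not-legit"]))
print(main(["policy"]))
```

To use this as a real policy executable, the demo calls would be replaced with `raise SystemExit(main(sys.argv))`, and the check would ideally verify something an attacker cannot guess, for example a pre-shared value embedded in the CSR.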