Intro

Recently I stumbled over a site that publicly served its Dockerfile. That particular instance wasn’t very interesting, but I started to wonder how widespread this is and what sites are exposing as a result.

To be clear, this isn’t exactly new: /Dockerfile has been listed in the SecLists repository for a while.

However, it seems that so far nobody has (publicly) investigated this. I also still operate a bunch of sites that are in the top 1 million list, and I couldn’t find a single request for this file in my (limited) log files.

So I ran my own scan of the Alexa top 1 million sites list.

This work was heavily inspired by Hanno Böck’s past research, and I used his wonderful tool snallygaster to conduct most of the scans. Thanks, Hanno!

What is a Dockerfile?

A Dockerfile is the blueprint of a container. It contains all commands needed to build it. It is a simple plaintext file. You can tell Docker to copy files into the container, expose network ports and of course run any command during the build, for example:

    FROM nginx
    COPY default.conf /etc/nginx/conf.d/default.conf
    COPY html/ /usr/share/nginx/html
    RUN echo "192.168.1.14 mysql" >> /etc/hosts
    EXPOSE 80

Basically you describe exactly how the container is configured, which packages are installed and which commands are run while building it.

As you can see it doesn’t necessarily contain sensitive information. In the above example we don’t even see which files are copied to the NGINX document root.

Results

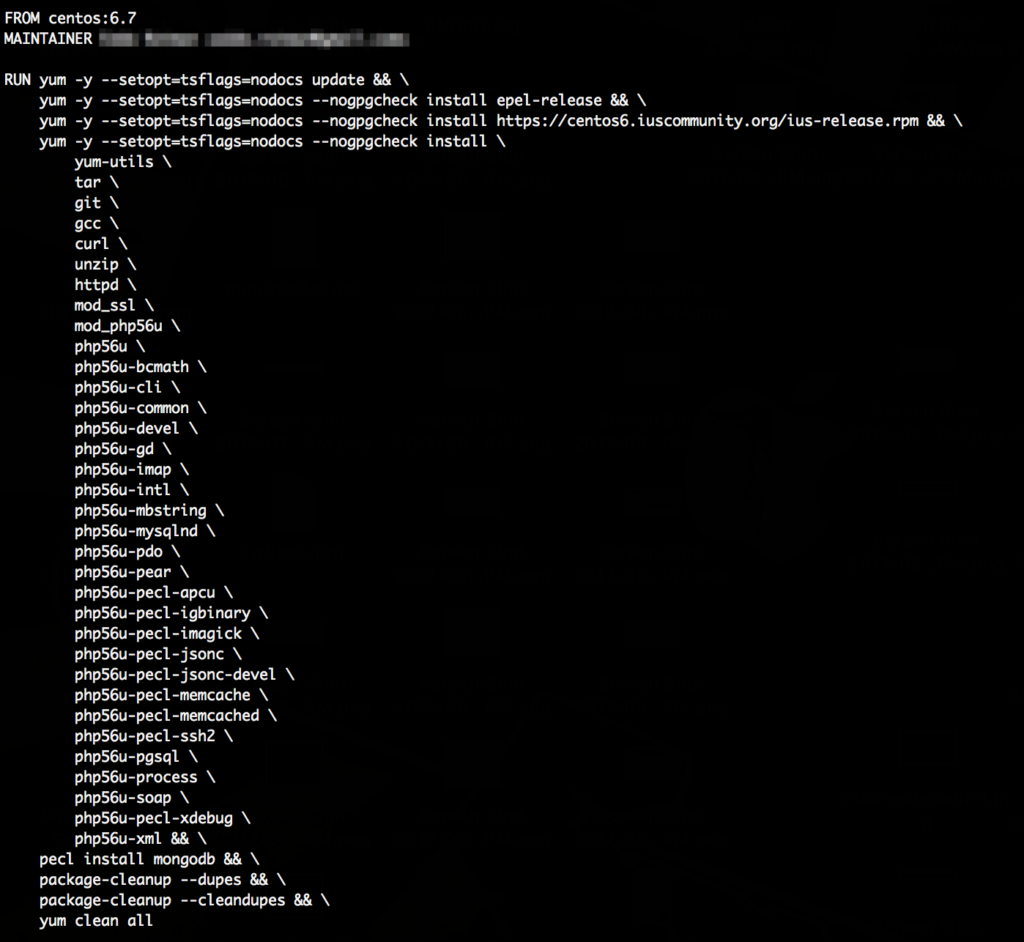

Out of the 1’000’000 sites 659 served a Dockerfile.

Existing Dockerfiles are heavily reused; one in particular was used 105 times.

Overall this boils down to 338 unique Dockerfiles being served.

41 were used two times or more, in detail:

The remaining 298 were uniquely used by only one site.
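A count like this can be produced by grouping the fetched files by a hash of their content. The following is a minimal sketch, assuming an exact byte-for-byte comparison; how the files were actually grouped in the scan is not stated, and the function name and sample data are made up for illustration.

```python
import hashlib
from collections import Counter


def summarize_duplicates(dockerfiles):
    """Group fetched Dockerfiles by content hash.

    `dockerfiles` maps a site name to the Dockerfile text it served
    (hypothetical input format, not the scan's real data structure).
    """
    # Identical file bodies produce the same digest and collapse to one key.
    digests = Counter(
        hashlib.sha256(text.encode("utf-8")).hexdigest()
        for text in dockerfiles.values()
    )
    reused = sum(1 for count in digests.values() if count >= 2)
    return {
        "sites": len(dockerfiles),
        "unique": len(digests),
        "reused": reused,
        "single_use": len(digests) - reused,
        "most_reused": max(digests.values(), default=0),
    }


# Made-up sample data, not taken from the scan.
sample = {
    "a.example": "FROM nginx\nEXPOSE 80\n",
    "b.example": "FROM nginx\nEXPOSE 80\n",
    "c.example": "FROM php:7-apache\n",
}
summary = summarize_duplicates(sample)
```

With an exact comparison, files that differ only in whitespace or comments count as distinct, so the number of unique files is an upper bound.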

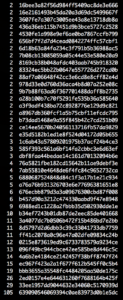

Most of them performed fairly innocent operations that didn’t tell us much, such as:

Not much there that we couldn’t also figure out by looking at the site directly.

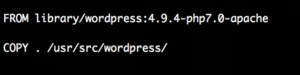

A lot of them gave us a very detailed view of what is probably running on the server, e.g.:

It’s nice to know exactly which PHP modules are used on the server; this might be useful in some cases.

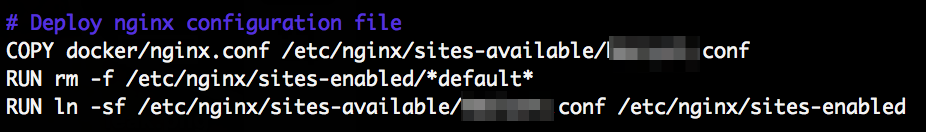

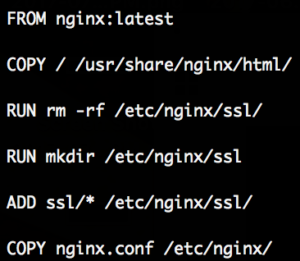

But as I dug deeper I found that sometimes not only the Dockerfile was exposed but also many of the configuration files it references. For example, this Dockerfile copies “docker/nginx.conf”:

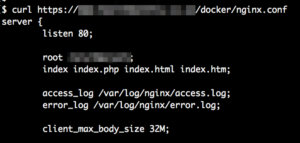

We can then simply try to access that file like this:

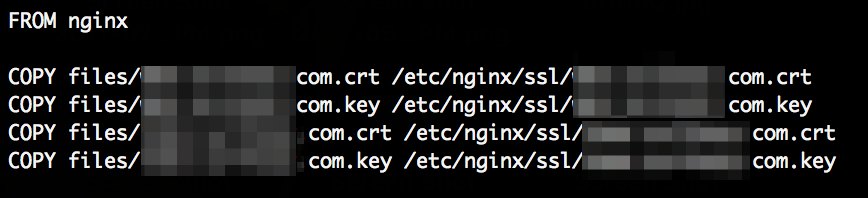

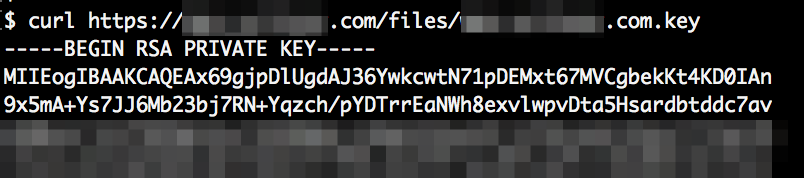

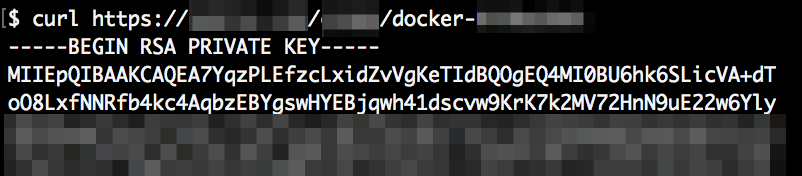
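The step from a Dockerfile to the files it references can be sketched in a few lines. This is an illustration only, assuming the simple space-separated form of COPY and ADD (the JSON array form is not handled); the helper names, the host site.example and the destination path are hypothetical.

```python
from urllib.parse import urljoin


def copy_sources(dockerfile_text):
    """Return the source paths named in COPY/ADD instructions."""
    sources = []
    for line in dockerfile_text.splitlines():
        parts = line.split()
        if len(parts) < 3 or parts[0].upper() not in ("COPY", "ADD"):
            continue
        # --from=... copies from another build stage, not from the build context.
        if any(p.startswith("--from") for p in parts[1:]):
            continue
        # Drop remaining flags such as --chown=...; the last argument is the destination.
        args = [p for p in parts[1:] if not p.startswith("--")]
        sources.extend(args[:-1])
    return sources


def candidate_urls(base_url, dockerfile_text):
    """Build URLs where the referenced files might be served."""
    urls = []
    for src in copy_sources(dockerfile_text):
        # "." and directories have no single file to request.
        if src in (".", "./") or src.endswith("/"):
            continue
        urls.append(urljoin(base_url, src))
    return urls


# Hypothetical Dockerfile; only the docker/nginx.conf path comes from the text.
sample = """FROM nginx
COPY . /usr/share/nginx/html
COPY docker/nginx.conf /etc/nginx/nginx.conf
"""
urls = candidate_urls("https://site.example/", sample)
```

Whether such a URL actually resolves depends on how the build context ended up in the document root.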

Somewhat common in that scenario are TLS certificates and, well, keys. I’ve found around 10 of those, for example:

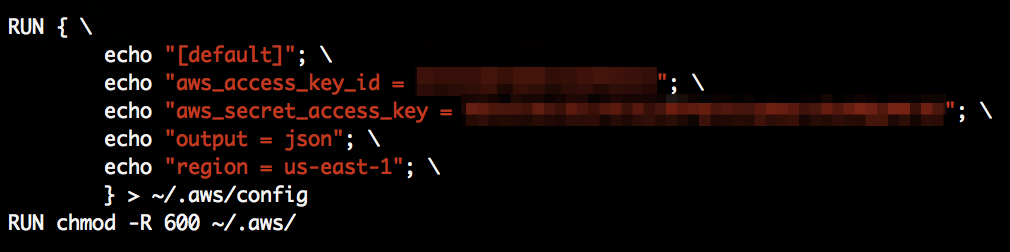

And some people simply do insane things in their Dockerfile, like exposing their AWS secret key:

Or using a tool called “sshpass” to pipe a password into ssh to automate an rsync run:

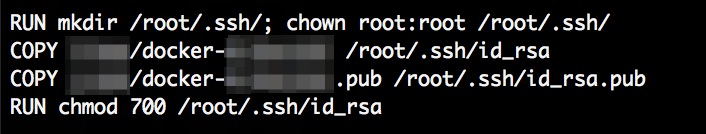

And at least one SSH root key is being served:

Overall I found SSH keys, npm tokens, TLS keys, passwords, AWS secrets, Amazon SES credentials, countless configuration files and source code of some of the applications.

These are of course the extreme examples, which are to be expected in such a wide-ranging scan.

How does this happen?

By default the Dockerfile is not copied into the container and certainly not to a publicly served folder.

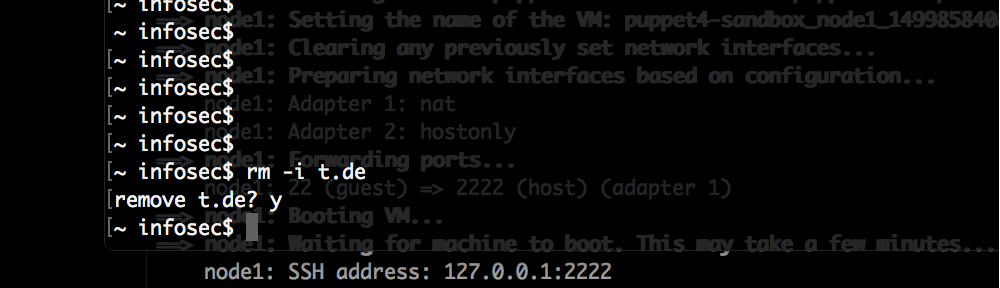

From what I can tell the mistake that most of these sites make is practically this (real example from the scan):

With the first COPY line they copy everything in the current folder to a publicly served folder.

Afterwards configuration files get copied.

With this, both the nginx.conf and the complete ssl directory are public. We can now simply fetch the nginx.conf, look up the names of the certificate and key files and then fetch those as well.
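A simple check for this pattern is to flag any COPY or ADD instruction whose source is the whole build context. The sketch below is a rough heuristic, not a full Dockerfile parser; the function name and the sample Dockerfile are hypothetical and only mirror the description above.

```python
def broad_copies(dockerfile_text):
    """Return (line_number, line) pairs for COPY/ADD of the whole build context."""
    findings = []
    for number, line in enumerate(dockerfile_text.splitlines(), start=1):
        parts = line.split()
        if len(parts) < 3 or parts[0].upper() not in ("COPY", "ADD"):
            continue
        # Copies from another build stage do not pull in the build context.
        if any(p.startswith("--from") for p in parts[1:]):
            continue
        args = [p for p in parts[1:] if not p.startswith("--")]
        # Every argument except the last one is a source path.
        if any(src in (".", "./", "*") for src in args[:-1]):
            findings.append((number, line.strip()))
    return findings


# Hypothetical Dockerfile mirroring the mistake described above.
sample = """FROM nginx
COPY . /usr/share/nginx/html
COPY nginx.conf /etc/nginx/nginx.conf
COPY ssl /etc/nginx/ssl
"""
findings = broad_copies(sample)
```

A flagged line is not necessarily a problem, since a .dockerignore file can exclude files from the build context, but it is worth a closer look whenever the destination is a publicly served folder.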

In some cases there was no such COPY command. I can only guess that the files ended up in the document root due to another mistake, possibly unrelated to Docker.

Conclusion

With only 0.066 % of sites exposing a Dockerfile, this doesn’t look like a very widespread problem. On top of that, only a subset of those – fewer than 100 – expose really critical information that can lead to a compromise.

But in any case, it rarely makes sense to publicly serve a Dockerfile.

Even if you don’t include any keys, passwords or other secrets, it still doesn’t make sense to give everyone a blueprint of your system.

The sites that don’t expose anything critical right now might start doing so in the future when changes are made to this seemingly private file.

It’s generally good advice – even if you don’t use Docker – to simply check your public webroot folder for any files that shouldn’t be there and remove them.
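Such a check can be automated. The following is a minimal sketch that walks a webroot and reports files whose names suggest they don’t belong there; the list of names and suffixes is an assumption and should be adapted to your own setup.

```python
import os
import tempfile
from pathlib import Path

# Assumed examples of names that rarely belong in a public folder.
SUSPICIOUS_NAMES = {"Dockerfile", "docker-compose.yml", ".dockerignore", ".env", "id_rsa"}
SUSPICIOUS_SUFFIXES = (".key", ".pem")


def find_suspicious_files(webroot):
    """Return relative paths of files under `webroot` that look out of place."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(webroot):
        for name in filenames:
            if name in SUSPICIOUS_NAMES or name.endswith(SUSPICIOUS_SUFFIXES):
                rel = os.path.relpath(os.path.join(dirpath, name), webroot)
                # Normalize separators so the output looks the same on every OS.
                hits.append(rel.replace(os.sep, "/"))
    return sorted(hits)


# Demo on a throwaway directory with made-up files.
with tempfile.TemporaryDirectory() as root:
    Path(root, "index.html").write_text("<h1>hi</h1>")
    Path(root, "Dockerfile").write_text("FROM nginx\n")
    Path(root, "ssl").mkdir()
    Path(root, "ssl", "site.key").write_text("not a real key")
    found = find_suspicious_files(root)
```

A name-based check only catches the obvious cases; a file with an unremarkable name can still contain secrets.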